Battling Numerical Instability: Stop Blaming the CPU

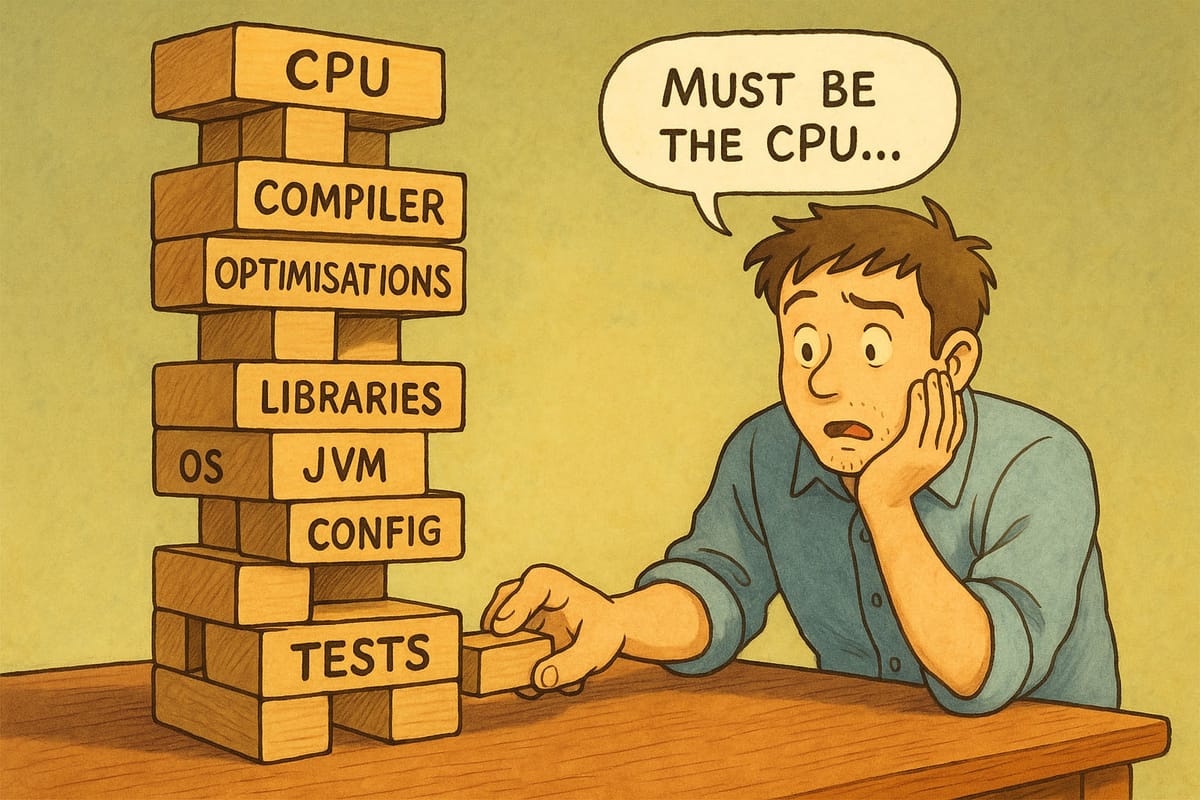

Before you blame the new CPU for breaking your VaR, maybe ask what your compiler flags and math libraries have been up to after hours.

You ran your code on another CPU and now the numbers don’t match. You’re probably swearing at whoever made the CPU for not respecting the IEEE 754 floating-point standard, right? You might want to take a look at your own code first though…

OK, granted, there are a small number of instructions that are implemented differently between Intel and AMD, but it really is a small number. In fact I can list them for you: RCPPS and RSQRTPS, the approximate reciprocal and reciprocal square root instructions. Strictly speaking we can add all the x87 instructions too, but if you’re still using those you probably have bigger problems to solve right now anyway 😁

If you’re worried you might be using one of those instructions, or just want to check that you aren’t, you can use Intel’s PIN tool. We even have a special tool to do just that.

Chances are, though, that your problems live elsewhere. There are several sources of numerical instability, and my bet is that you changed not only the CPU but a bunch of the other candidates at the same time and hoped for the best. That was always going to be a painful choice.

Everything from the compiler flags you choose and the optimisations they result in, to the math libraries you select, to the versions of your runtimes (libc, or even OpenJDK and the parameters you pass to it) can change your output. Yes. Really.
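To make that concrete, here’s a minimal Python sketch (my own illustration, not taken from any particular quant library) of why anything that reorders arithmetic can move your numbers. Floating-point addition isn’t associative, so summing the same values in a different order can legitimately give a different answer. The function names are made up for the example, and the pairwise version is only a stand-in for the kind of regrouping a vectorised or "fast math" reduction might do.

```python
import math


def sum_left_to_right(values):
    """Add the values strictly in the order given, like a plain scalar loop."""
    total = 0.0
    for v in values:
        total += v
    return total


def sum_pairwise(values):
    """Add the values in a tree-shaped order (a stand-in for a regrouped reduction)."""
    if len(values) == 1:
        return values[0]
    mid = len(values) // 2
    return sum_pairwise(values[:mid]) + sum_pairwise(values[mid:])


# Large and small magnitudes mixed together: the classic trouble case.
values = [1e16, 1.0, -1e16, 1.0]

print("left to right:", sum_left_to_right(values))
print("pairwise     :", sum_pairwise(values))
print("math.fsum    :", math.fsum(values))  # correctly rounded reference sum
```

With IEEE 754 doubles the three calls give 1.0, 0.0 and 2.0 respectively. Same inputs, same CPU, three answers, and the only thing that changed was the order of the additions.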

You can just as easily encounter numerical instability by upgrading your operating system (I’ve seen at least one case of this) as you can by swapping your CPU.

Without rigorous multi-platform build and test pipelines that validate your quant libraries against a minimal but comprehensive set of test data, you’re setting yourself up for an expensive and needlessly frustrating exercise to achieve CPU portability. (I know even doing that in many organisations may feel somewhat Sisyphean.)
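What might the comparison step of such a pipeline look like? Here’s a rough sketch, assuming you store reference outputs from a known-good platform and compare each new build against them. Everything here is hypothetical: the function names, the test case names, the values and the tolerance are placeholders you’d replace with whatever your risk team will actually sign off on. It reports the distance in ULPs (units in the last place) alongside the raw values, which tends to make the "is this noise or a bug?" conversation much shorter. It doesn’t handle NaNs.

```python
import math
import struct


def ulp_distance(a: float, b: float) -> int:
    """Count the representable doubles between a and b (0 means identical value)."""
    def ordered(x: float) -> int:
        # Reinterpret the double's bits as a signed 64-bit integer, then map
        # negative floats so that integer order matches floating-point order.
        bits = struct.unpack("<q", struct.pack("<d", x))[0]
        return bits if bits >= 0 else -(bits & 0x7FFFFFFFFFFFFFFF)
    return abs(ordered(a) - ordered(b))


def compare_to_baseline(baseline, candidate, rel_tol=1e-12, abs_tol=0.0):
    """Return the test cases whose candidate value drifts beyond the tolerance."""
    failures = {}
    for name, expected in baseline.items():
        actual = candidate[name]
        if not math.isclose(actual, expected, rel_tol=rel_tol, abs_tol=abs_tol):
            failures[name] = (expected, actual, ulp_distance(expected, actual))
    return failures


# Hypothetical reference results from the old platform...
baseline = {"case_a": 0.3, "case_b": 100.0}
# ...and the same cases re-run on the new one.
candidate = {"case_a": 0.1 + 0.2, "case_b": 100.001}

failures = compare_to_baseline(baseline, candidate)
for name, (expected, actual, ulps) in failures.items():
    print(f"{name}: expected {expected!r}, got {actual!r} ({ulps} ULPs apart)")
```

Here `case_a` differs by a single ULP and passes, while `case_b` is flagged. Whether one ULP is acceptable for your VaR is a business decision, not a technical one, which is exactly why the tolerance is a parameter.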

That’s not to say that it can’t be done. Google now builds and tests over 100,000 applications automatically across x86 and ARM. They started in April last year at the release of their Axion processor.

Why bother? You mean apart from all the geopolitical reasons, the rise of ARM, RISC-V on the horizon and the soap opera that is chip manufacturing at the moment? Ah well, it will probably save you a ton of money, that’s why. See the previous post for more on that.