Call That Big Data?

Think you deal with big data? Nah. This is big data!

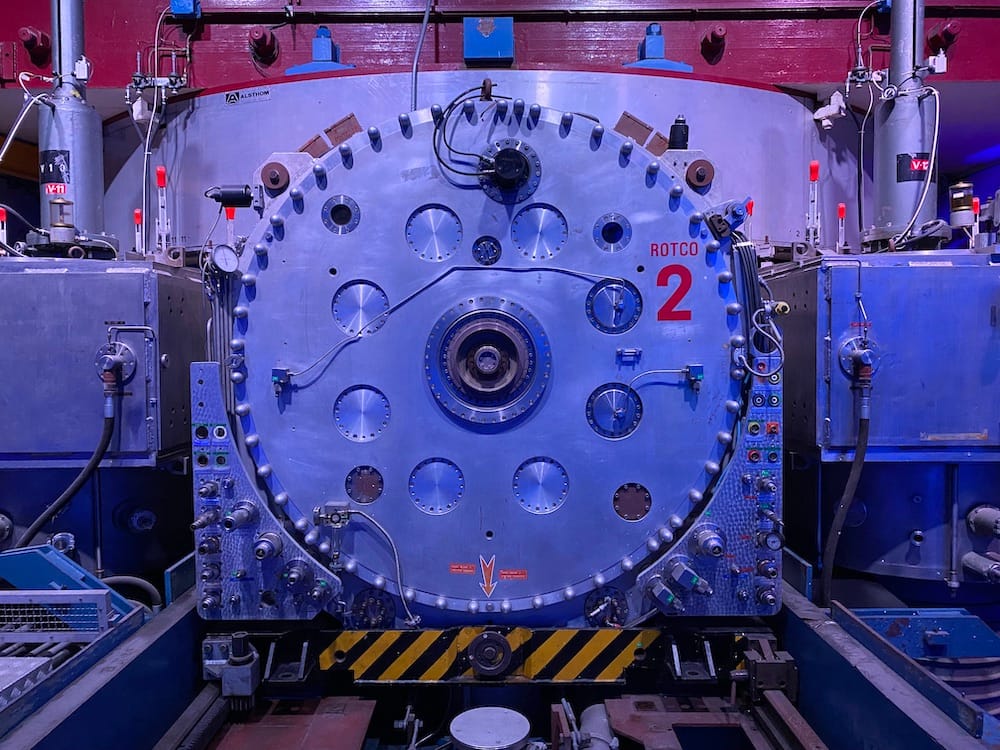

I mentioned that I was at CERN last week and they casually dropped the fact that they use half a million CPUs to process the data collected by the Large Hadron Collider.

Well, I went back a day later and managed to get onto a guided tour that included ATLAS, one of the detectors at the LHC. A single detector in the array that makes up ATLAS generates around 10 Mb of data per second. ATLAS alone generates in the region of 1 to 5 petabytes per second.

Yes, you read that correctly: petabytes per second. Overall, the four major detectors at the LHC generate approximately 10 PB/s of data.

To put that number into perspective, YouTube only has to deal with about 3 TB of data per minute. CERN generates over 60,000 times more data every second than the entire population of YouTubers with their HD video cameras, iPhones and GoPros.
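If you want to check the comparison yourself, here is a quick back-of-the-envelope sketch. It only uses the figures quoted above, and it assumes both are decimal terabytes and petabytes (that unit reading is my assumption, the tour guide did not specify). On those numbers the gap comes out at roughly 200,000 times, so "over 60,000" is, if anything, on the conservative side.

```python
# Back-of-the-envelope check using the figures quoted in the post.
# Assumption: decimal units (1 TB = 10**12 bytes, 1 PB = 10**15 bytes).
TB = 10**12
PB = 10**15

lhc_bytes_per_second = 10 * PB        # ~10 PB/s across the four major detectors
youtube_bytes_per_minute = 3 * TB     # ~3 TB per minute
youtube_bytes_per_second = youtube_bytes_per_minute / 60

ratio = lhc_bytes_per_second / youtube_bytes_per_second
print(f"LHC vs YouTube, per second: about {ratio:,.0f}x")
```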

Suddenly that half a million CPU count is starting to make sense. It really puts what I’ve been calling big data with my FSI customers into perspective!

Luckily, only a tiny proportion of the data is kept: only 1 in 100,000 data points survives an initial analysis, which happens within 25 nanoseconds. Yes, nanoseconds. There’s a particle collision every 25 nanoseconds, so the decision is made in that timeframe. Take that, FSI HFT folk. Reckon your code is fast? Not CERN fast!
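For a feel of what those two numbers imply, here is a rough sketch. It assumes the "1 in 100,000" filter can be applied directly to the overall ~10 PB/s figure, which is my simplification (data points and bytes are not necessarily the same thing), so treat the result as an order of magnitude rather than an official CERN number.

```python
# Rough numbers implied by the post; not official CERN figures.
collision_interval_ns = 25
collisions_per_second = 1e9 / collision_interval_ns    # one decision per collision

raw_bytes_per_second = 10 * 10**15    # ~10 PB/s, decimal units assumed
keep_one_in = 100_000                 # 1 in 100,000 data points kept
# Assumption: the keep ratio applies uniformly to the raw byte rate.
kept_bytes_per_second = raw_bytes_per_second / keep_one_in

print(f"Decisions per second: {collisions_per_second:,.0f}")
print(f"Data kept: about {kept_bytes_per_second / 10**9:,.0f} GB/s")
```

That works out to 40 million keep-or-drop decisions every second, and even after throwing away 99.999% of the data there would still be something like 100 GB/s left over on these assumptions.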

Would you look at that, CERN and YouTube generate a similar level of useful data too 🤣