Comparing Cloud VM Performance Across Architectures

Is it worth making your code portable across CPU architectures when running on the cloud? 3 Clouds, 3 CPU Architectures, 3,000 Hours of Benchmarks. Here’s the Verdict

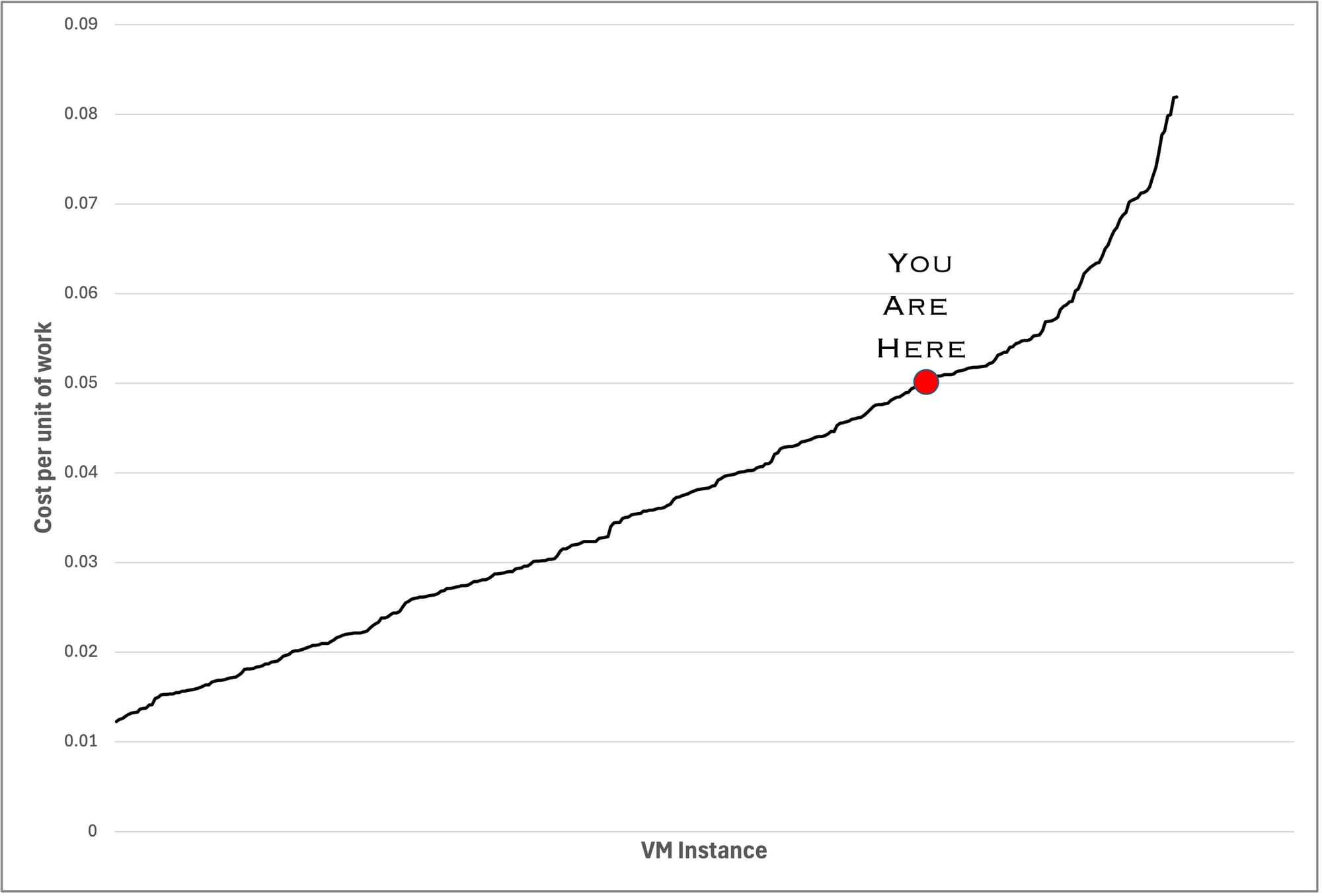

Remember this graph I teased you with?

I’ll remind you: it came from the first slide of a presentation I recently delivered at Quant Minds, and it shows the performance-adjusted cost of a selection of virtual machines in the cloud. I say a selection, but we actually ran the latest three generations of AMD and Intel CPUs, plus all varieties of ARM CPUs. That meant over 3,000 hours of benchmark runs.

The presentation focused on resolving problems of numerical stability in financial services to enable portability across CPU architectures and manufacturers. But I had a captive audience and I’m sure they were all far too polite to get up and leave. I’m under no illusion that you, on the other hand, won’t just scroll past this if I start talking about exponents, mantissas and whether or not addition is associative 😁

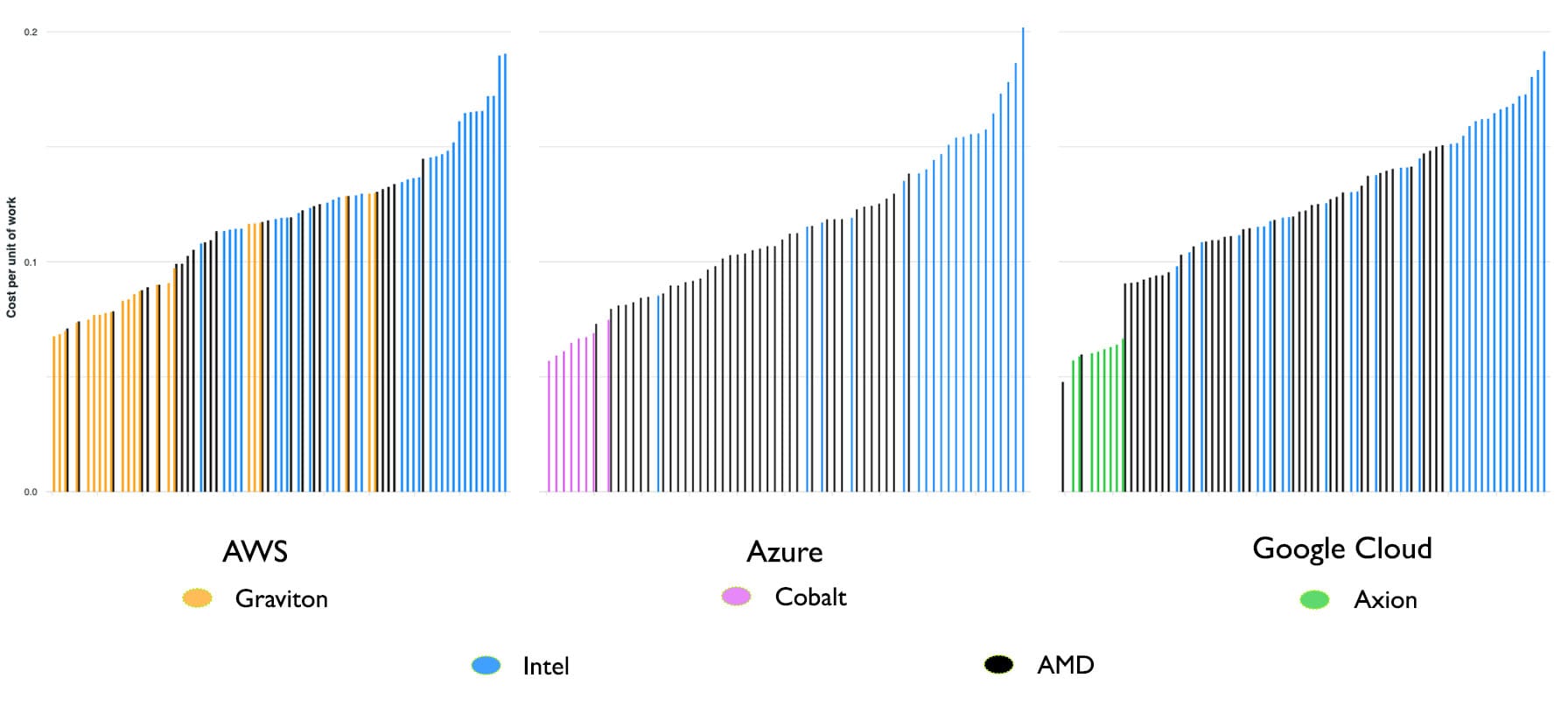

So, here’s the bait. The second picture shows that same data but this time coloured to show the different CPU types and split by the three major cloud providers.

Prices were current as of 09 Nov, so they are a little out of date now. The benchmark used here was specific to financial services: COREx. We did run other benchmarks too, which we could also use to determine performance-adjusted pricing.

Each line represents one VM of a particular type and size, and we ran multiple sizes of VMs of each type (across multiple regions). The data is restricted to on-demand pricing for VMs with at least 4 GB of RAM per vCPU.
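If you want to try this on your own numbers, one common way to get a performance-adjusted price is to divide the hourly price by the benchmark score. The sketch below assumes that definition (the exact formula behind the graphs isn't spelled out in this post), and every VM name, price and score in it is a made-up placeholder, not measured data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmResult:
    """One benchmarked VM. All field names are hypothetical."""
    name: str
    vcpus: int
    ram_gb: float
    price_per_hour: float  # on-demand price, e.g. USD per hour
    score: float           # benchmark throughput, higher is better


def is_eligible(vm: VmResult, min_gb_per_vcpu: float = 4.0) -> bool:
    # Mirrors the filter described above: at least 4 GB of RAM per vCPU.
    return vm.ram_gb / vm.vcpus >= min_gb_per_vcpu


def performance_adjusted_cost(vm: VmResult) -> float:
    # ASSUMPTION: cost per unit of benchmark work = hourly price / score.
    # Lower is better.
    return vm.price_per_hour / vm.score


def rank(vms: list[VmResult]) -> list[VmResult]:
    # Keep only eligible VMs, cheapest per unit of work first.
    return sorted(filter(is_eligible, vms), key=performance_adjusted_cost)


# Placeholder data, invented purely for illustration.
SAMPLE = [
    VmResult("vm-a", vcpus=4, ram_gb=16, price_per_hour=0.20, score=100.0),
    VmResult("vm-b", vcpus=4, ram_gb=16, price_per_hour=0.15, score=60.0),
    VmResult("vm-c", vcpus=4, ram_gb=8, price_per_hour=0.10, score=80.0),
]

if __name__ == "__main__":
    for vm in rank(SAMPLE):
        print(f"{vm.name}: {performance_adjusted_cost(vm):.4f} per unit of work")
```

Note that the cheapest VM per hour in the placeholder data (`vm-c`) never appears in the ranking because it fails the RAM-per-vCPU filter, and the most expensive one per hour (`vm-a`) comes out on top once its higher score is taken into account.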

Mostly, you can draw your own conclusions (share them too?), but the TL;DR is: if you have a large existing x86-only codebase, make sure you can use AMD CPUs. If you’re writing brand new code, make sure you can run on ARM too.

We are still working on turning that presentation into a paper… bear with us!