Financial Risk Analytics on GPUs 2.0

Will running financial risk on GPUs be more of a success this time round?

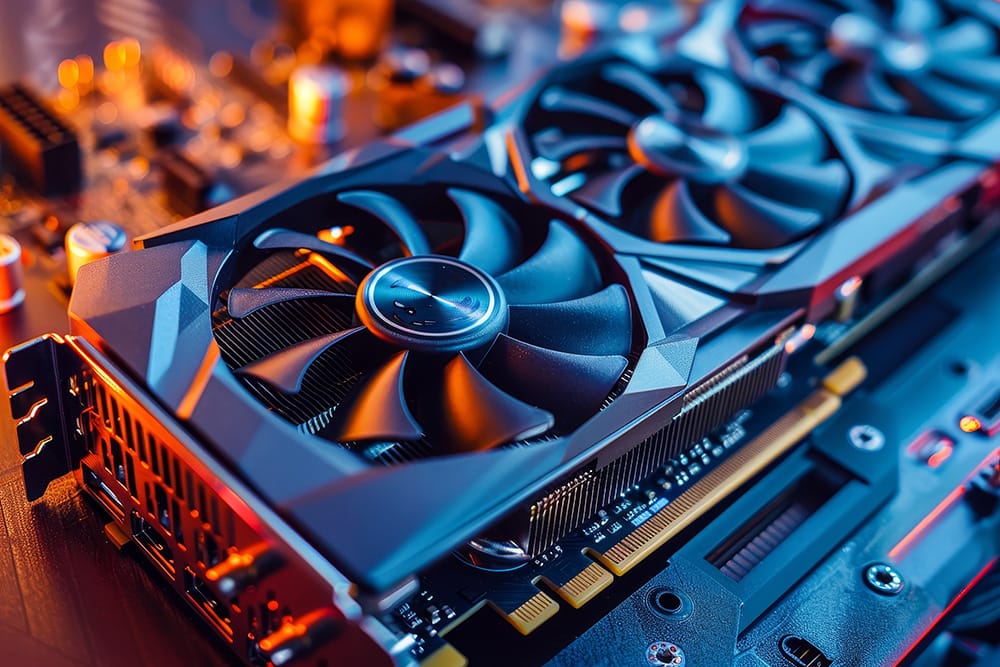

NVIDIA seems to be king of the hill right now. Stock price? AI? Everyone scrambling to get hold of their GPUs? Check, check and check!

In the last few weeks, we’ve seen a couple of stories that relate to the use of GPUs not by the insatiable gluttonous computivore that is AI but for financial risk analytics.

Acadia released an update to Open Risk Engine to allow it to run on GPUs:

And NVIDIA themselves put out an article showcasing how to run financial risk models on GPUs using only ISO C++. No CUDA!

Quick aside: wouldn’t it have been awesome if the timing had been better and the folks at ORE had adopted the ISO C++ approach?

Our clients, too, are increasingly expressing at the very least curiosity about, if not a desire to adopt, GPU compute for financial risk analytics.

But we’ve been here before. Not that long ago either, circa 2010 perhaps. CUDA had been in the wild for a little while. There was a great deal of hype around accelerated compute times running on GPU with CUDA. The conversation usually started with claims of 50x speed improvements.

I was involved in just such a project, migrating a risk system to run on GPU. It was great work. Symphony didn’t yet support scheduling across GPUs, so we had to write custom scheduling code. The GPU compute migration itself threw up many unexpected challenges. Oh, and I’m sure anyone involved in such work (even today) loves the constant reconciliation of numbers between CPU- and GPU-calculated output and the testing overheads it brings with it. 😀
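For anyone who hasn’t had the pleasure, that reconciliation usually boils down to something like the sketch below: compare the two sets of numbers value by value and flag anything outside a tolerance. This is a minimal illustration, not code from any real project. The `reconcile` helper and the default tolerances are assumptions made up for the example, and in practice the acceptable tolerance is something you agree with your risk managers, not a constant in a header.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper: returns the indices at which CPU and GPU results
// disagree by more than a combined absolute + relative tolerance.
// The default tolerances are illustrative assumptions only.
std::vector<std::size_t> reconcile(const std::vector<double>& cpu,
                                   const std::vector<double>& gpu,
                                   double rel_tol = 1e-9,
                                   double abs_tol = 1e-12) {
    if (cpu.size() != gpu.size()) {
        throw std::invalid_argument("result vectors differ in length");
    }
    std::vector<std::size_t> breaks;
    for (std::size_t i = 0; i < cpu.size(); ++i) {
        const double diff = std::fabs(cpu[i] - gpu[i]);
        const double scale = std::max(std::fabs(cpu[i]), std::fabs(gpu[i]));
        // Written as !(a <= b) so that a NaN on either side counts as a break.
        if (!(diff <= abs_tol + rel_tol * scale)) {
            breaks.push_back(i);
        }
    }
    return breaks;
}
```

Call it with the per-trade (or per-scenario) values from each run, e.g. `reconcile(cpu_pvs, gpu_pvs)`, and investigate whatever indices come back. Bit-for-bit equality is rarely a realistic target, since the order of floating-point operations tends to differ between the two platforms.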

The reality though, and I’ve come across several systems that migrated to GPUs since then, was more like a 5x speed-up. I guess that’s still good, but when it was sold as being ten times that much it felt anticlimactic.

I haven’t yet managed to see any genuinely conclusive cost comparisons either. My gut feel, though, is that it is at best on par.

So, if you’re getting swept up by the current hype cycle around GPU compute, I’d certainly advise taking a measured approach. It’s not 2010. You’re not limited to the compute you have on prem. Bursting to cloud is a real thing. CPUs (especially AMD) have also come a very long way. There might be cheaper ways to run your risk faster.

I need to take a closer look at ORE on GPU, but assuming it is possible, we will update COREx, the financial risk HPC benchmark, to be able to run on GPU. The inevitable cloud VM performance and cost comparisons will follow …