Improving Utilisation in HPC & AI – Part 5

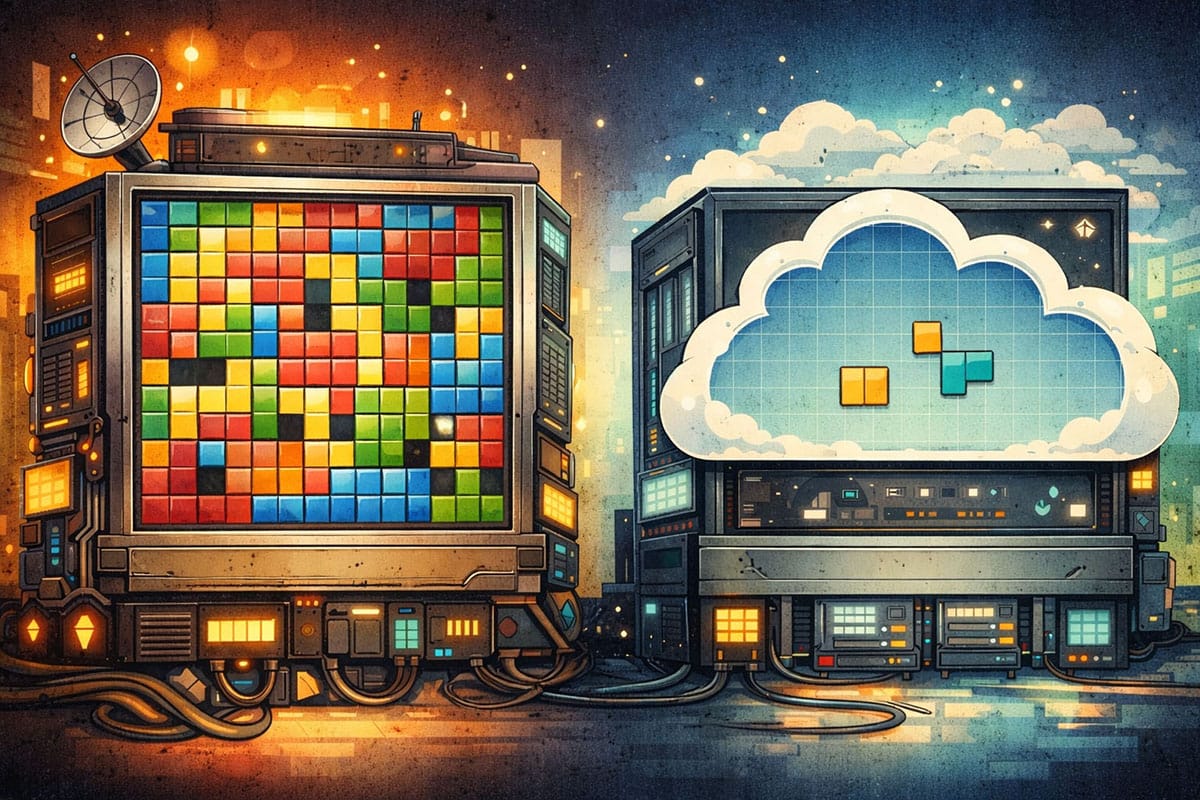

Part 5 of this mini-series on improving utilisation and reducing costs looks at defragmenting elastic capacity.

The previous two articles in this series covered supply elasticity and workload fragmentation. Naturally, putting the two together presents a fun little problem that likes to hike the unit cost of compute like a central banker pumping interest rates in 2022.

So far in this mini-series, I have omitted talking about the length of each job. Unfortunately, this is a common source of workload fragmentation. Typically, your HPC scheduler has no idea how long any given job will run for. When using elastic capacity, this can have the unfortunate side effect of leaving long-running jobs on your temporary (i.e. additional marginal cost) capacity.

The problem can also be far worse than it first appears from looking at the picture. The simplified diagram implies a small number of jobs can be moved on premises; what it doesn’t show is how many VMs are running to serve the additional capacity. Even if jobs were perfectly optimised and we had 100% utilisation (of the elastic cloud capacity) at the start of the run, this will tail off as jobs complete. That’s normal. Depending on the run times of your jobs, or more precisely the variation in run times, and how the jobs were distributed across VMs, this can mean several VMs running to serve a workload that would comfortably fit on one machine. Possibly one machine in your fixed capacity pool.
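To make the tail-off concrete, here is a minimal sketch. It assumes every job starts at time zero, each VM has a fixed number of job slots, and we somehow know the run times up front (which, as noted above, your scheduler usually doesn’t). The function name `tail_snapshot` and the sample run times are made up purely for illustration.

```python
import math

def tail_snapshot(vm_jobs, slots_per_vm, t):
    """Compare VMs still in use at time t with the minimum that would be needed.

    vm_jobs: one list of job run times (hours) per VM; all jobs start at t=0.
    Returns (vms_in_use, running_jobs, vms_needed_if_consolidated).
    """
    # Count the jobs still running on each VM at time t.
    running_per_vm = [sum(1 for runtime in jobs if runtime > t) for jobs in vm_jobs]
    vms_in_use = sum(1 for n in running_per_vm if n > 0)
    running_jobs = sum(running_per_vm)
    # If jobs could be moved freely, this many VMs would be enough.
    vms_needed = math.ceil(running_jobs / slots_per_vm)
    return vms_in_use, running_jobs, vms_needed

# Hypothetical batch: 4 VMs, 4 slots each, one long job per VM.
vm_jobs = [
    [0.5, 0.5, 0.5, 6.0],
    [0.5, 1.0, 1.0, 5.0],
    [0.5, 0.5, 2.0, 7.0],
    [1.0, 1.0, 1.0, 4.0],
]

for t in (0, 1, 3):
    in_use, running, needed = tail_snapshot(vm_jobs, slots_per_vm=4, t=t)
    print(f"t={t}h: {in_use} VMs in use for {running} jobs, {needed} would do")
```

With these made-up numbers, three hours in you are still paying for four VMs to run four jobs that would fit on one.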

A high-impact example of this could be a GPU workload with job lengths varying from minutes for some jobs to hours for others (we’ve seen this in the past). In such a case you could end up running several high-cost multi-GPU VMs, each running only a single job that consumes one GPU (or even a fraction of one). All this for a workload that, at the tail end of your batch, would fit not only on a single machine but also on premises, where you have no marginal cost of compute.

There are two ways in which this can be solved. Firstly, increased consistency in job times. If you’re running a single workload this is usually not too bad, and generally just a workload splitting problem that needs to be solved to create more consistent job lengths. If you’re running multiple workloads, though, this can become a little more tricky and it is not always possible (either technically or politically within your organisation). At that point you’re back to segregating capacity, which introduces its own problems for utilisation rates. Where it is achievable, this solution effectively minimises the problem: because all jobs complete at similar times, VMs free up more consistently.
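As a rough illustration of the workload splitting idea, the sketch below groups work items into a fixed number of jobs with similar total run times, using the well-known longest-processing-time-first greedy heuristic. It assumes you have a per-item run time estimate, which is a big assumption in practice; the function name and the sample estimates are hypothetical.

```python
import heapq

def split_into_balanced_jobs(item_minutes, n_jobs):
    """Group work items into n_jobs jobs with similar total run times.

    item_minutes: estimated run time of each work item (an assumption:
    real estimates may be rough or unavailable).
    Greedy rule: place the longest remaining item into the lightest job.
    """
    # Min-heap of (total minutes so far, job index).
    heap = [(0.0, i) for i in range(n_jobs)]
    heapq.heapify(heap)
    jobs = [[] for _ in range(n_jobs)]
    for minutes in sorted(item_minutes, reverse=True):
        total, idx = heapq.heappop(heap)
        jobs[idx].append(minutes)
        heapq.heappush(heap, (total + minutes, idx))
    return jobs

# Hypothetical per-item estimates in minutes.
items = [90, 60, 45, 30, 30, 20, 15, 10]
jobs = split_into_balanced_jobs(items, n_jobs=3)
for job in jobs:
    print(job, "->", sum(job), "minutes")
```

The heuristic won’t give a perfect split, but it tends to keep job lengths close enough that VMs empty out at roughly the same time.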

The second option is to move running workload. This can be done at an application level, with jobs either checkpointing regularly or being signalled to checkpoint, then killed and restarted on another node. Alternatively, if that isn’t feasible, there are now a number of solutions available that will persist the state from an operating system perspective and restart the job on another node without the application being aware of the move. (Apologies to those of you who are now having PTSD symptoms from dealing with vMotion moving HPC nodes under your feet in the early days!) This method allows the variation in job times but defragments jobs onto a single host instead of multiple hosts, or back onto fixed (already paid for) capacity. A bit like the old HDD defragmentation that you ran religiously every week in a futile attempt to keep your Windows NT machine running with some semblance of usability.
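Deciding where the moved jobs should land is essentially a bin packing problem. Here is a minimal sketch using first-fit decreasing, with hosts listed in order of preference so that fixed capacity fills up first. It only plans the moves; the checkpoint and restart mechanics are out of scope. The function name `plan_consolidation`, the host names and the GPU figures are all hypothetical.

```python
def plan_consolidation(jobs, hosts):
    """Plan where to restart running jobs so they occupy as few hosts as possible.

    jobs: dict of job id -> GPUs needed (may be fractional).
    hosts: list of (host name, free GPUs), most preferred first,
           e.g. fixed on-premises capacity before cloud VMs.
    Returns dict of job id -> host name; raises ValueError if a job fits nowhere.
    """
    free = [[name, capacity] for name, capacity in hosts]
    placement = {}
    # First-fit decreasing: place the biggest jobs first, each on the
    # first (most preferred) host that still has room.
    for job_id, need in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        for slot in free:
            if slot[1] >= need:
                slot[1] -= need
                placement[job_id] = slot[0]
                break
        else:
            raise ValueError(f"no host can fit job {job_id!r}")
    return placement

# Hypothetical tail of a batch: four jobs, each currently alone on its own cloud VM.
tail_jobs = {"a": 1, "b": 0.5, "c": 1, "d": 0.5}
targets = [("onprem-1", 2), ("cloud-vm-1", 8)]
plan = plan_consolidation(tail_jobs, targets)
print(plan)
```

In this made-up case, two jobs go back on premises and the other two share a single cloud VM, so three of the four cloud VMs can be shut down. A real planner would also weigh the cost of the move itself (checkpoint time, lost work) against the saving.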

The effect of these techniques really depends on how big a problem this is in your own environment, but for workloads with long tails it can have an enormous impact.