Quantum #90

Issue #90 of the weekly HPC newsletter by HMx Labs. A little light on HPC this week, but if you like getting worked up over FP64 support or small form factor devices for running LLM inference locally, you’ll be happy.

I tend to keep the focus of this weekly update on HPC (with a side of its application in finance). I view AI as an HPC workload and AI infrastructure as pretty much just another supercomputer, but I figure there’s already so much other AI news that it doesn’t need me repeating any of it. Some weeks, though, it’s hard to find any HPC news that isn’t AI related! I don’t doubt that it’s out there; I just suspect that it isn’t getting any airtime or column inches. You know where to find me if you have something I should include here…

For those of us bemoaning the loss, or at least the stagnation, of FP64 in Nvidia’s latest GPUs, Nvidia has proudly given us a new version of cuBLAS that emulates FP64 using lower-precision data types on those same GPUs, including the B300. I’ve heard claims that this delivers performance as good as hardware FP64 support, but I’m quite curious to see what it really looks like. I guess we should have written a few GPU-specific benchmarks, shouldn’t we? I’ll add it to the list. Don’t hold your breath, though; it’s getting to be a long list!

Also, I really wish everyone would stop calling small form factor devices designed to run LLMs locally “supercomputers”. They really aren’t. Please stop. (But yes, I do want one of each.)

In The News

Updates from the big three clouds on all things HPC, such as they are this week.

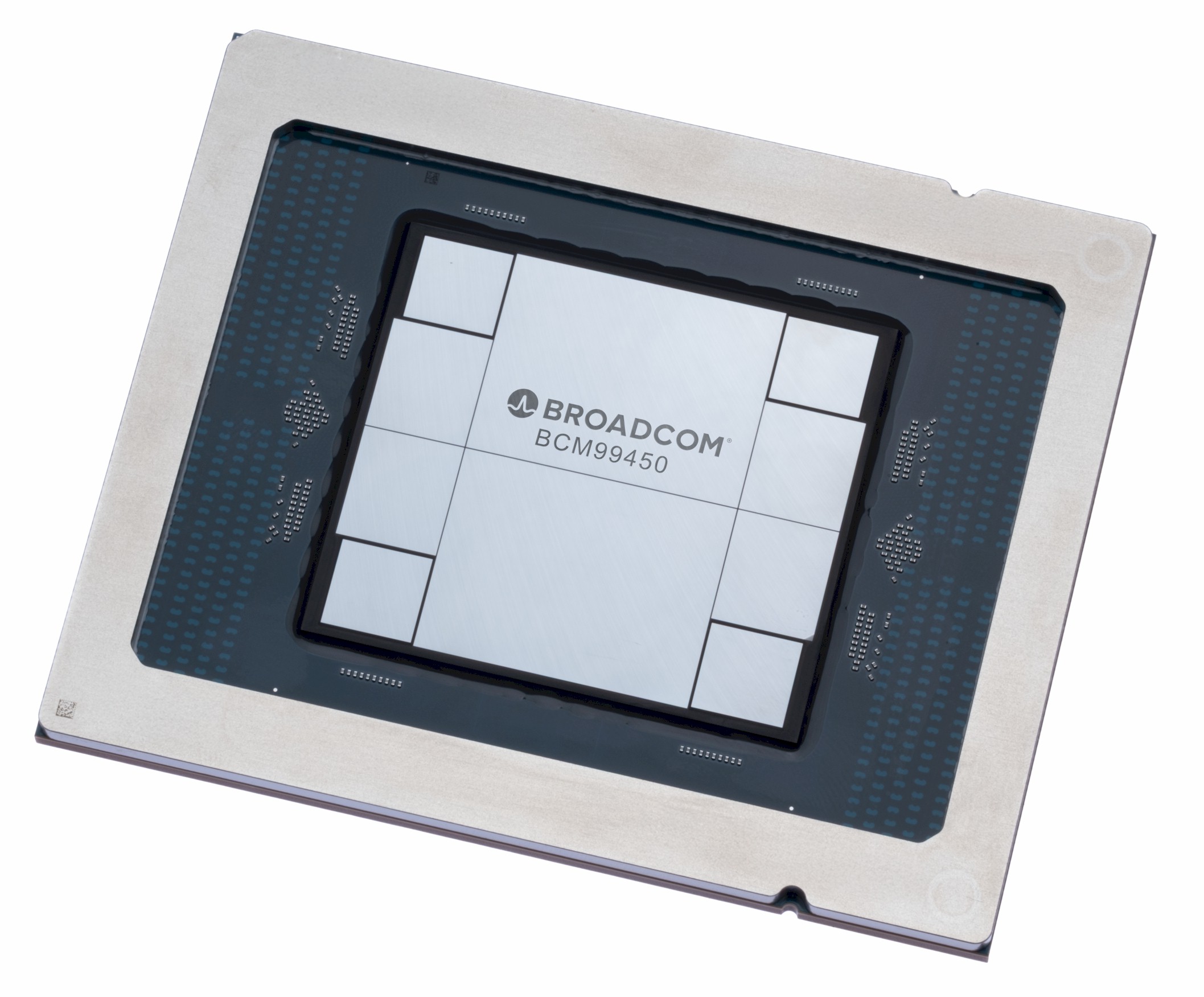

Some opinion, but also a lot of good context, on Broadcom’s recent missed earnings.

Whilst I vehemently disagree that this is a supercomputer, it is nonetheless very interesting. Local LLM execution at 120-billion-parameter scale… sounds very promising.

Nvidia talks up FP64 emulation in cuBLAS to make up for the lacklustre native FP64 performance of the B300.

https://www.hpcwire.com/2025/12/09/nvidia-says-its-not-abandoning-64-bit-computing/

To the surprise of precisely no one, server sales in Q3 2025 were up, by a nice 61%. I am a little surprised, though, that storage sales were up only 2%.

From HMx Labs

Continuing from last week’s discussion based on my talk at Quant Minds.

Let’s see how good my crystal ball really is. Spoiler alert: it wasn’t amazing, but 3 out of 5 isn’t too bad.

Know someone else who might like to read this newsletter? Forward this on to them or, even better, ask them to sign up here: https://cloudhpc.news