Schizophrenic AI

Recently I have been both incredibly impressed with AI and equally astounded by its uselessness.

Last month we released a paper about optimising HPC workloads in finance. This isn’t about that paper. This is about the video Google NotebookLM created by “reading” that paper. And you can win some HPC Club merch as a bonus.

Honestly, I’m impressed. It was not long ago that I was using chatbot-driven models to create images to accompany my musings. The output was rudimentary but just about acceptable for LinkedIn or blog posts. Just.

While I’m certainly no video editor by profession, I have in the past created educational training videos for clients, and that was a time-consuming (and therefore expensive) endeavour. I have some understanding of the effort it would take me to create a video such as this manually.

The total effort to produce this video was uploading the original PDF and hitting the video button. So about 30 seconds. It would have taken me days.

But (there’s always a but, isn’t there?) it does have some mistakes. It was fairly easy for me to spot them because I wrote a lot of that paper and have read all of it multiple times. Actually, even then I missed one that @Callum Ward (one of the other authors) caught.

Here’s the fun bit: how many can you find? Want to win some HPC Club merch? A hoodie? A T-shirt? Stickers? One free item goes to the first person who finds each error. Mostly the errors are trivial, but there is one significant error. I won’t give it away just yet. Let’s see if you find it.

There are two problems here. Firstly, the obvious one: if I were to use NotebookLM as a shortcut to learn, I couldn’t be confident that it’s actually presenting the original content factually. Secondly, I have no way to correct the errors without requesting a complete regeneration of the whole video, which may then result in other errors.

As a professional workflow that’s no good. Human-created content would have been editable. Even if it used an AI voice-over or AI-created elements, fixing small errors would be relatively quick to do. Whilst highly impressive, this video could never be delivered to a client with these errors, or even published alongside the original paper.

I don’t need the video output to be perfect if, alongside the fully rendered video, it can give me a “project” output of some form that allows me to correct the errors by changing the text the narrator “reads” and to edit the stills and video content in my usual tools (Final Cut, Premiere, etc.). I suspect, though, that this would actually mean creating the video in a completely different way to start with.

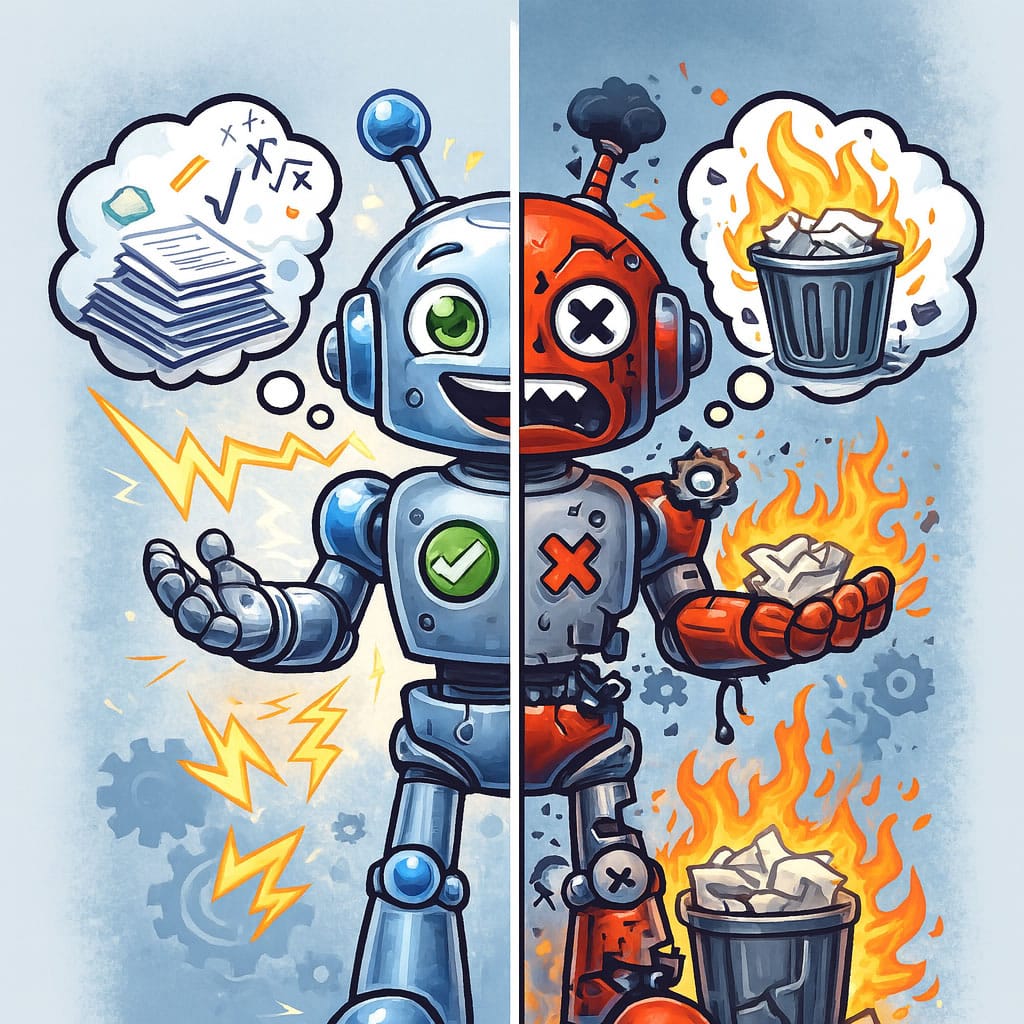

My other big problem with AI now is the huge variability of the output. I can one-shot a video like this, which I am quite frankly somewhat astounded by, but at the same time I can’t get it to create a relatively simple image without getting into a wrestling match and having to swear at it. I’m not even kidding either.

The output from NotebookLM is deeply impressive. I was not expecting that. Which makes it all the harder to reconcile with the complete garbage AI will spew out minutes later.

Yes, I know the image generation example I provided uses a different model to the NotebookLM video, but I’ve had enough similar experiences with Gemini/Nano Banana to know it’s not an OpenAI-specific problem.