What’s in an HPC Scheduler?

Are HPC schedulers still fit for purpose? Are we building monoliths with high levels of lock-in? Do we have the interoperability we need?

A good scheduler may well be the cornerstone of HPC, but it’s about time we realised it’s not the only foundation we need. Heck, let’s be honest, shall we? Most HPC isn’t even compute-constrained anymore. That’s a dirty little secret we tend to keep to ourselves and whisper in hushed voices while sipping coffee around the liquid-cooled supercomputer.

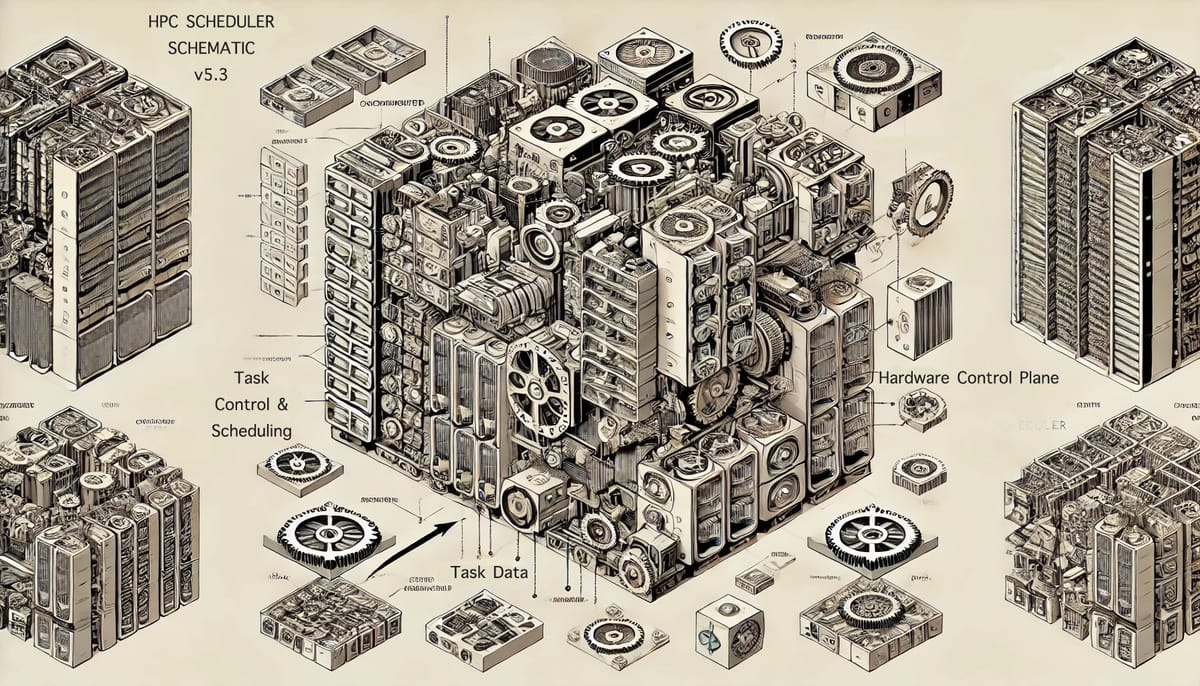

Isn’t it time we acknowledged it? Isn’t it time we admitted that a data plane is just as important? A node-, rack-, zone- and region-aware data plane. And a hardware control plane. One that works for cloud, on-prem or colo.

But critically, I don’t want my data plane to be part of my scheduler. Or my hardware control. No, I want an open standard that lets them communicate.

As anyone who has attempted to swap schedulers in an HPC system will tell you, the API changes are trivial. The problems come because the choice of scheduler inevitably leaves its mark on the architecture of the rest of the system. The mark of the beast. Can we be done with that already?

I want my scheduler to schedule and be bloody good at it, but do no more. I want it to talk to my data plane to figure out where the data is, so it can make those scheduling decisions. I want it to talk to my hardware control plane to spin compute up or down as needed.
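To make that separation concrete, here is a minimal Python sketch of what it could look like. Everything in it is a hypothetical illustration, not an existing standard or any real scheduler's API: the `DataPlane` and `HardwareControl` interfaces, the `Location` type and the `locality` scoring are all assumptions made up for this example. The point is only that the scheduler holds no storage or provisioning logic of its own; it asks the other two planes.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Location:
    """Hypothetical topology address: region > zone > rack > node."""
    region: str
    zone: str
    rack: str
    node: str


class DataPlane(Protocol):
    """Assumed interface: the data plane knows where replicas live."""
    def locate(self, dataset: str) -> list[Location]: ...


class HardwareControl(Protocol):
    """Assumed interface: the hardware control plane owns node lifecycle."""
    def idle_nodes(self) -> list[Location]: ...
    def power_on(self, near: Location) -> Location: ...


def locality(a: Location, b: Location) -> int:
    """Count matching topology levels from region down to node (0 to 4)."""
    score = 0
    pairs = zip((a.region, a.zone, a.rack, a.node),
                (b.region, b.zone, b.rack, b.node))
    for x, y in pairs:
        if x != y:
            break
        score += 1
    return score


class Scheduler:
    """Schedules and nothing else: it only talks to the two planes."""

    def __init__(self, data: DataPlane, hardware: HardwareControl) -> None:
        self.data = data
        self.hardware = hardware

    def place(self, dataset: str) -> Location:
        # Ask the data plane where the data is.
        replicas = self.data.locate(dataset)
        if not replicas:
            raise LookupError(f"no replicas known for {dataset!r}")
        # Prefer the idle node closest to any replica.
        idle = self.hardware.idle_nodes()
        if idle:
            return max(idle, key=lambda n: max(locality(n, r) for r in replicas))
        # Nothing idle: ask hardware control to spin up compute near the data.
        return self.hardware.power_on(near=replicas[0])
```

Because the scheduler depends only on the two interfaces, swapping the storage system or the provisioning layer (cloud, on-prem or colo) should not ripple into the scheduling code, which is the whole argument above in miniature.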

If we are ever to have a hope of using ML/AI in HPC infrastructure, we need to start by exposing the data needed to train it (more on this in the linked article below).

This is an excerpt taken from: