How Good is GPT o1 really?

I pitted OpenAI’s latest and greatest o1 model against little ol’ me. Guess who won?

OpenAI ChatGPT o1: 0 - Hamza: 1

Relax, your job is still safe.

I’ve been using a paid subscription to OpenAI’s ChatGPT for some time now. You may have noticed the AI-generated images that often accompany my ramblings. That’s not all I use it for though, and with the release of the o1 model I was curious to see how much better, if at all, it’s gotten.

You see, dear readers, you have been unwitting participants in a little experiment of mine this week. Human experimentation! How very Sheldonesque of me, making ol’ Sam Altman look good right here. I’m sorry. I promise no humans or AIs were hurt in the writing of this post 😏

Whilst I’ve been using ChatGPT to generate images, and sometimes code, for quite a while, until this week I had never once posted an AI-written article. Not for any moral or ethical reasons. No. It’s far simpler than that. For my domain at least (HPC), I always found them to be, well… crap.

But perhaps that was just my own ego. So I pitted Sam Altman’s latest and greatest model against my weakest skill: writing posts like this. (I’m pretty sure I write better code than I write blog posts.) Earlier this week I picked a topic that has never gained much traction, and I wrote another post on it. I asked ChatGPT to write one too (same topic), asked it nicely to refine it, then to make it fit within LinkedIn’s word limit. Sheesh, for a so-called intelligence it doesn’t half need a lot of direction and coaxing.

I then generated two very similar images to go with them and, instead of my usual single post, I scheduled both to go live at the same time. For those of you who engaged with both (there were a small number) and were wondering if I’d lost the plot, well, now you know.

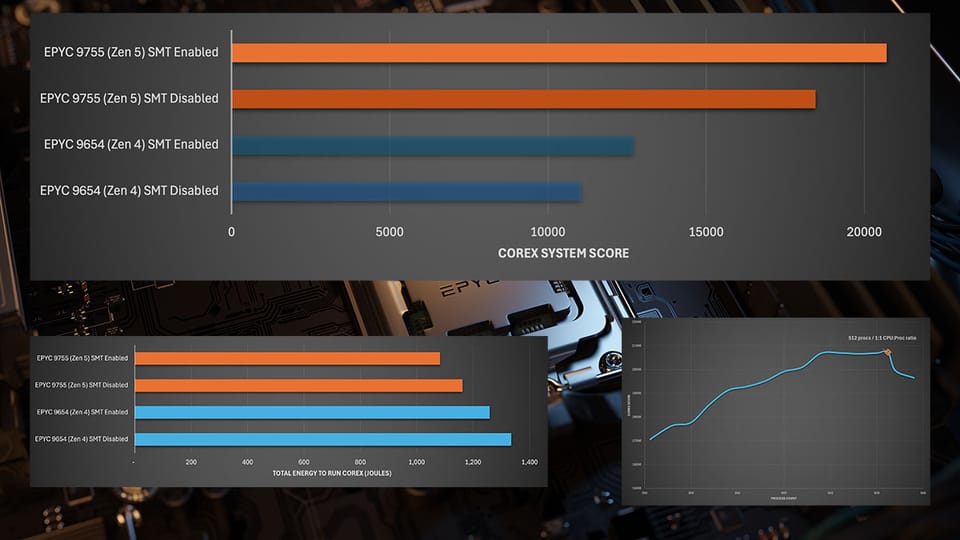

The results after 2 days:

ChatGPT: 219 impressions, 5 likes, 2 reposts, 0 comments.

Me: 885 impressions, 17 likes, 7 comments.

Suck it, ChatGPT. The reposts on the ChatGPT version? From HMx Labs employees (they didn’t know about the experiment either).

Which one did you prefer and why?

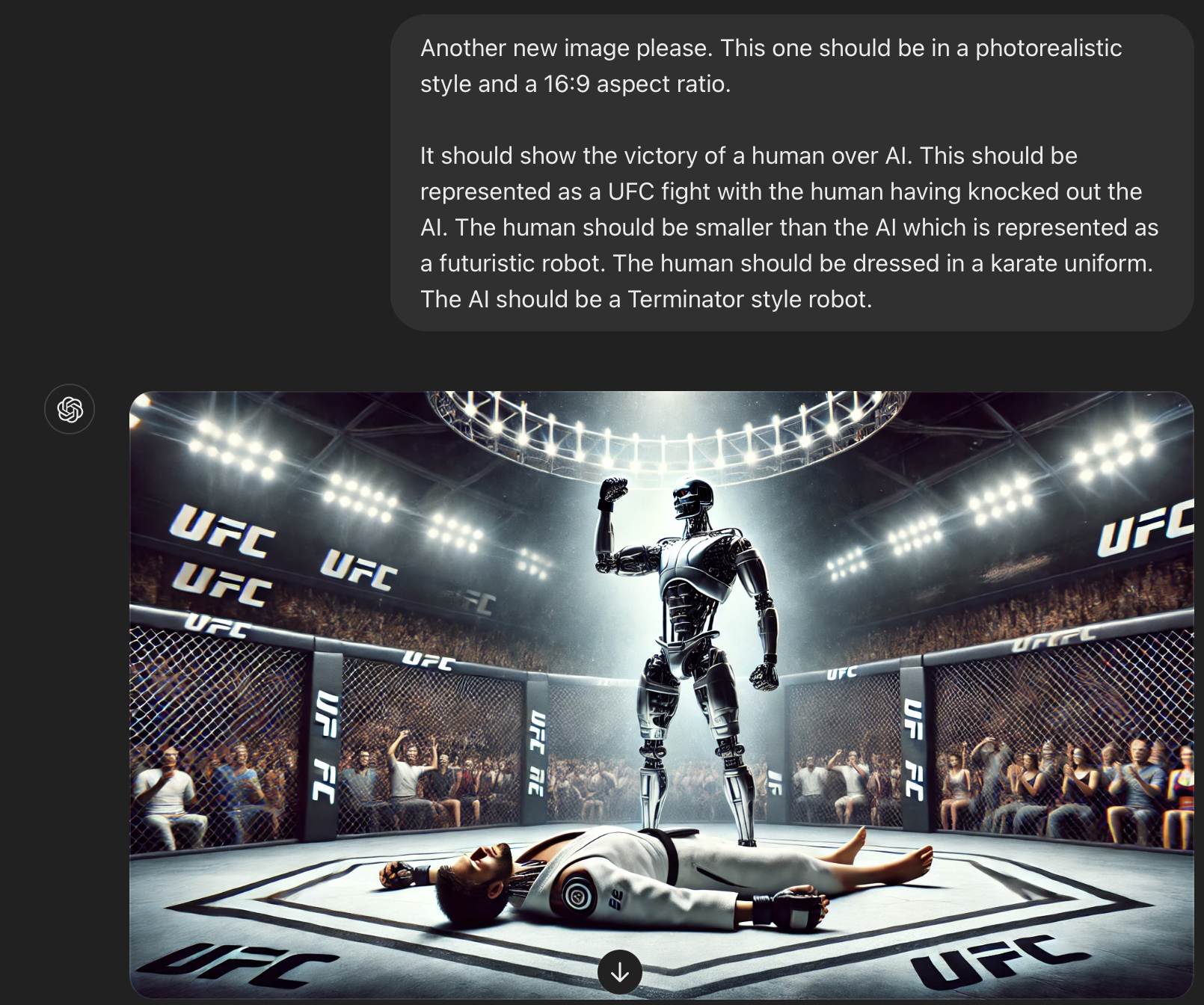

EDIT: I went to generate the image to go with this post after having written it. This was the first version it gave me 😆

This is a great example of how ChatGPT has no understanding of the words it is fed. All of the words are in the image, but it swapped the victor. I come across this sort of thing surprisingly often.

Now, I could start projecting and anthropomorphising ChatGPT, saying it doesn’t like to lose, but that would ascribe consciousness and intent to a machine that has neither. It’s just dumb. The old adage of “do not ascribe to malice that which can be explained by incompetence” applies equally well to AI.