HPC Glossary

A glossary of HPC terms as used in our recent articles, to ensure clarity and a shared understanding. Most of these terms are well understood; however, there can be some confusion, particularly around the common usage of the words core and CPU.

Socket / Physical CPU

Historically, a socket is the physical connector that provides a mechanical and electrical connection between a circuit board and a CPU module. Each socket holds a single processor.

In the context of HPC, a socket is generally understood to mean a single physical processor. In servers this usually still corresponds to a physical socket holding a processor, but in laptops, mobile phones and other small form factor devices (such as a Raspberry Pi) there may be no socket at all, with the processor soldered directly to the circuit board instead. Even so, to avoid confusion with the term (logical) CPU (see below), we use the term socket to refer to the physical processor.

In virtualised hardware, the number of sockets presented to a guest virtual machine does not have to correspond to the number of sockets present on the host hardware. A hypervisor may present any number of virtualised sockets to a virtual machine.

Core

Modern processors usually contain multiple physical processing units, or cores, on each physical CPU (socket, as defined above); a core is therefore a physical portion of a socket. Whilst cores in HPC (and most server) environments are generally homogeneous (i.e. all cores on a processor are the same), many consumer-grade devices have heterogeneous cores (for example, most smartphones and Apple silicon-based computers).

In virtualised environments, a core may not correspond to a physical core on the underlying hardware. A hypervisor may present a logical CPU (a hardware thread, as defined below) to the guest as a virtualised core.

Logical CPU

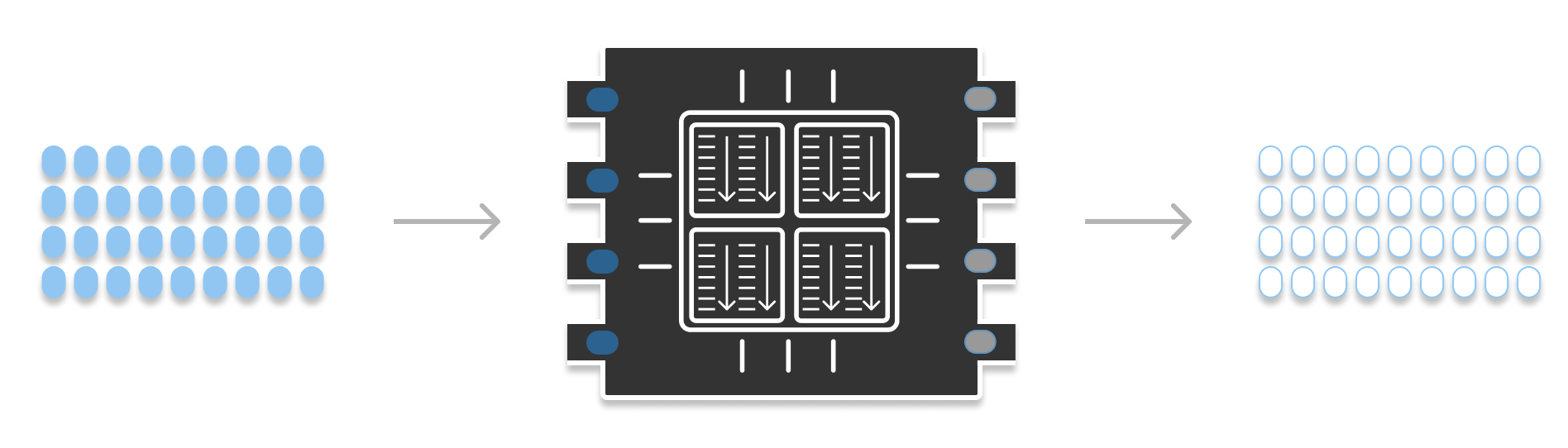

Any given core may run one or more (in practice, almost always two) simultaneous threads of execution using what is known as Simultaneous Multithreading (SMT), commonly referred to by Intel's brand name, Hyper-Threading.

A thread of execution is not a separate physical component on the processor die; rather, it represents the simultaneous use of the same core by multiple processes or application threads.

In virtualised environments, a thread may be presented to the guest virtual machine as either a core or a thread, i.e. a hypervisor can expose a single core with SMT enabled either as one core with two threads or as two cores with one thread each.

The more commonly used and accepted term for a thread is (logical) CPU, often called a vCPU in virtualised hardware. Whilst this naming is somewhat at odds with the historical meaning of a physical CPU module, it matches what the operating system sees: each thread is presented to it as a single logical CPU. As such, both tools such as lscpu and cloud providers (and, therefore, these articles) use the term CPU to mean logical CPUs rather than physical CPU modules.
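As a sketch of how these terms map onto what Linux reports, the following Python parses /proc/cpuinfo and counts distinct sockets ("physical id" values), distinct cores ("physical id" and "core id" pairs) and logical CPUs ("processor" entries). It assumes the x86 Linux layout of that file; on other architectures these fields may be absent, in which case lscpu is the more reliable tool. The function and type names are our own, not part of any library.

```python
import os
from collections import namedtuple

# Counts for each level of the socket / core / logical CPU hierarchy.
Topology = namedtuple("Topology", ["sockets", "cores", "logical_cpus"])


def parse_cpuinfo(text: str) -> Topology:
    """Count sockets, physical cores and logical CPUs in /proc/cpuinfo text.

    Assumes the x86 Linux layout: one blank-line-separated block per logical
    CPU, containing "processor", "physical id" and "core id" fields.
    """
    logical_cpus = 0
    sockets = set()
    cores = set()
    physical_id = core_id = None
    # The trailing "" acts as a final blank line so the last block is counted.
    for line in text.splitlines() + [""]:
        if not line.strip():
            if physical_id is not None and core_id is not None:
                sockets.add(physical_id)
                # Core ids are only unique within a socket, so key on both.
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical_cpus += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            core_id = value
    return Topology(len(sockets), len(cores), logical_cpus)


if __name__ == "__main__":
    if os.path.exists("/proc/cpuinfo"):
        with open("/proc/cpuinfo") as f:
            print(parse_cpuinfo(f.read()))
    # os.cpu_count() reports logical CPUs, the figure lscpu labels "CPU(s)".
    print("os.cpu_count():", os.cpu_count())
```

On a single-socket machine with SMT enabled, logical_cpus should come out at twice the number of cores.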

The total number of CPUs a machine has is, therefore, equal to:

Number of sockets × number of cores per socket × number of threads per core

Put another way, when you build your next gaming machine, you won't be buying 128 CPUs from Amazon; rather, you will buy one physical CPU that contains 128 logical CPUs (for example, 64 cores with two threads each).
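The formula above can be written as a one-line Python function. The 64-core, two-threads-per-core split for the gaming machine and the two-socket server are assumptions chosen for illustration.

```python
def total_logical_cpus(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical CPUs = sockets x cores per socket x threads per core."""
    return sockets * cores_per_socket * threads_per_core


# Gaming machine: one socket, assumed 64 cores with SMT (two threads each).
print(total_logical_cpus(1, 64, 2))  # 128
# Hypothetical two-socket server, 32 cores per socket, SMT disabled.
print(total_logical_cpus(2, 32, 1))  # 64
```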

Task

HPC systems are generally built around application code that processes discrete units of work. Many instances of this application are run in parallel to distribute the workload across many CPUs (spanning many machines and sometimes even data centres).

Each executing instance of the application, together with its set of input parameters, is referred to as a task.
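To make the definition concrete, here is a minimal Python sketch that splits a workload into tasks, each pairing an identifier with its own input parameters. Task, split_into_tasks and the "items" parameter are hypothetical names; in a real grid each task would be launched by the scheduler as a separate process, often on a different machine.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Task:
    """One executing instance of the application together with its inputs."""
    task_id: int
    params: Dict[str, Any] = field(default_factory=dict)


def split_into_tasks(work_items: List[Any], items_per_task: int) -> List[Task]:
    """Split a workload into discrete units of work, one Task per chunk."""
    if items_per_task < 1:
        raise ValueError("items_per_task must be at least 1")
    starts = range(0, len(work_items), items_per_task)
    return [
        Task(task_id=i, params={"items": work_items[s:s + items_per_task]})
        for i, s in enumerate(starts)
    ]


# Ten hypothetical work items split into tasks of up to four items each.
for task in split_into_tasks(list(range(10)), 4):
    print(task)
```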

Slot

Whilst the terms socket, core and CPU are not HPC-specific, a slot is an HPC construct and, unlike the previous definitions, is not defined by the hardware. Instead, it is a value that is set by the HPC system, most commonly by the scheduler. Slot may also be a somewhat IBM Spectrum-specific term; however, it is the term we have chosen to use in this series of articles.

Not all software that runs on compute grids is perfectly single-threaded and able to use 100% of a CPU. The software may be multi-threaded (not ideal, but quite common) and require more than a single CPU. Alternatively, it may be single-threaded but consume only a fraction of a CPU (usually older systems running on more modern hardware). In both cases, to optimise utilisation, the scheduler can define how many instances of the binary (or tasks) should run on a machine. For example, on an 8-CPU machine it is possible to define that 4 slots (concurrent tasks) should run, as each task will use two CPUs (i.e. a multi-threaded application), or, alternatively, that 16 slots should run, as each task is only capable of consuming 50% of a CPU. Each running instance of the process is assigned to a slot.

The ratio of slots to CPUs is usually referred to as the slot ratio.
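The two 8-CPU configurations described above can be expressed as a short Python sketch. It assumes each task's CPU consumption is known up front and rounds down to whole slots; the function names are hypothetical, and in practice the slot count is a value set in the scheduler's configuration rather than computed like this. Fraction keeps ratios such as one half exact.

```python
import math
from fractions import Fraction
from typing import Union

Number = Union[int, Fraction]


def slots_for(cpus: int, cpus_per_task: Number) -> int:
    """Whole slots a machine can host when each task consumes cpus_per_task CPUs."""
    return math.floor(Fraction(cpus) / Fraction(cpus_per_task))


def slot_ratio(slots: int, cpus: int) -> Fraction:
    """The slot ratio: slots per CPU."""
    return Fraction(slots, cpus)


# Multi-threaded application using two CPUs per task on an 8-CPU machine.
multi = slots_for(8, 2)
# Older single-threaded application able to use only half a CPU per task.
light = slots_for(8, Fraction(1, 2))
print(multi, slot_ratio(multi, 8))   # 4 1/2
print(light, slot_ratio(light, 8))   # 16 2
```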

Measuring Compute Size

Machine Hours

A unit of measure based on the length of time (in hours) for which a (virtual or physical) machine is running. These measures have limited utility in the HPC world, except in very homogeneous environments, because of the large variation in the capabilities of different machines.

It is, however, important to measure, as in cloud environments virtual machines are charged by the number of hours they are "switched on", regardless of how many vCPUs you have enabled or disabled, or whether SMT is enabled.

Core Hours

A unit of measure based on the time (in hours) for which a single core within a machine is used. This is a commonly used measure in on-premises HPC environments, where the hardware is generally uniform (with SMT either on or off across the whole cluster) and core hour figures are therefore comparable across workloads of different sizes.

Importantly, it is a measure of the size of a workload. A workload that completes in four hours on a cluster of 200 machines with 8 cores each (i.e. 200 × 8 × 4 = 6,400 core hours) has the same compute requirement as one that completes in two hours on 400 machines with 8 cores each (400 × 8 × 2 = 6,400 core hours).
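A minimal Python check of the arithmetic above, assuming every core is busy for the full duration of the run:

```python
def core_hours(machines: int, cores_per_machine: int, hours: float) -> float:
    """Workload size in core hours, assuming every core is busy for the whole run."""
    return machines * cores_per_machine * hours


four_hour_run = core_hours(200, 8, 4)   # 6,400 core hours
two_hour_run = core_hours(400, 8, 2)    # 6,400 core hours
assert four_hour_run == two_hour_run == 6400
```

Doubling the machines halves the elapsed time but leaves the core hours, and so the size of the workload, unchanged.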

CPU Hours

CPU hours are much like core hours, but instead of counting cores, they count (logical) CPUs (see above for the difference). This becomes particularly relevant if the environment is heterogeneous with respect to whether SMT (Hyper-Threading) is enabled. In such an environment, using core hours could be off by a factor of two when comparing machines with SMT enabled and disabled.
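A sketch of that factor-of-two discrepancy in Python, assuming two threads per core when SMT is enabled. It reuses the 200-machine, 8-core, four-hour run from the core hours example: the core hours are identical with SMT on or off, but the CPU hours double.

```python
def core_hours(machines: int, cores_per_machine: int, hours: float) -> float:
    return machines * cores_per_machine * hours


def cpu_hours(machines: int, cores_per_machine: int,
              threads_per_core: int, hours: float) -> float:
    """CPU hours count logical CPUs, so SMT multiplies by threads per core."""
    return machines * cores_per_machine * threads_per_core * hours


cores = core_hours(200, 8, 4)        # 6,400 core hours, SMT on or off
smt_off = cpu_hours(200, 8, 1, 4)    # 6,400 CPU hours
smt_on = cpu_hours(200, 8, 2, 4)     # 12,800 CPU hours
print(cores, smt_off, smt_on)
```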

Slot Hours

Unlike the above metrics, which are generally geared towards hardware-centric use cases such as costing or optimisation (of cost or performance), slot hours may be used by HPC applications to reflect the total workload processed. As the name implies, this measures hours of slot time, summed across all slots, in the same way that core hours sum the time of each core.
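A sketch of slot hours and their relationship to CPU hours, in Python. The conversion follows from the definition of the slot ratio (slots per CPU) and assumes every slot is occupied for the whole period; the 10-machine, 8-CPU cluster and the function names are hypothetical.

```python
from fractions import Fraction


def slot_hours(machines: int, slots_per_machine: int, hours) -> Fraction:
    """Total slot hours: every slot on every machine, for the given duration."""
    return Fraction(machines * slots_per_machine) * Fraction(hours)


def cpu_hours_from_slot_hours(slot_hours_total, ratio) -> Fraction:
    """Convert slot hours to CPU hours, where ratio = slots / CPUs.

    Assumes every slot is occupied for the whole period.
    """
    return Fraction(slot_hours_total) / Fraction(ratio)


# Hypothetical cluster: 10 machines with 8 CPUs each, running for 3 hours.
heavy = slot_hours(10, 4, 3)    # multi-threaded app, 4 slots per machine
light = slot_hours(10, 16, 3)   # half-CPU app, 16 slots per machine
print(heavy, cpu_hours_from_slot_hours(heavy, Fraction(4, 8)))   # 120 240
print(light, cpu_hours_from_slot_hours(light, Fraction(16, 8)))  # 480 240
```

Both configurations consume the same 240 CPU hours, yet their slot hours differ by a factor of four, reflecting how many task instances were run rather than how much hardware was used.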

Hours On vs Used

For all of the above measures of capacity or size, there are in fact two possible measurements: hours on and hours used. For simplicity, the following is limited to CPU hours, but applies equally to core or machine hours.

CPU hours on is a measure of the time that the CPU (in practice, all CPUs in the machine) is switched on. For on-premises clusters this is simply 24 hours a day, 7 days a week (unless machines are powered down and this is correctly measured). For cloud infrastructure, it is measured as the billable time for the machine, which may differ slightly from the time during which the OS is booted and available to user processes.

CPU hours used, as the name implies, is a measurement of the time a CPU is in use by the HPC workload. Naturally, in this case, only a subset of the CPUs within a machine may be in use at any given time. This metric is important (both on-premises and in the cloud) as it enables grid efficiency to be calculated and periods of low utilisation to be identified.
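A sketch, in Python, of how hours on and hours used combine into a grid efficiency figure, and how hourly usage might flag periods of low utilisation. The cluster size, task records, 50% threshold and function names are all hypothetical; real figures would come from scheduler accounting data or cloud billing data.

```python
from typing import Iterable, List, Sequence, Tuple


def cpu_hours_on(machines: int, cpus_per_machine: int, hours_on: float) -> float:
    """CPU hours on: every CPU in every machine counts while the machine is on."""
    return machines * cpus_per_machine * hours_on


def cpu_hours_used(task_records: Iterable[Tuple[int, float]]) -> float:
    """CPU hours used: sum of CPUs x hours over (cpus, hours) task records."""
    return sum(cpus * hours for cpus, hours in task_records)


def grid_efficiency(used: float, on: float) -> float:
    """Fraction of switched-on CPU time actually used by the workload."""
    return used / on if on else 0.0


def low_utilisation_hours(cpus_busy_per_hour: Sequence[int], cpus_on: int,
                          threshold: float = 0.5) -> List[int]:
    """Indices of hours in which fewer than `threshold` of the CPUs were busy."""
    return [hour for hour, busy in enumerate(cpus_busy_per_hour)
            if busy / cpus_on < threshold]


# 10 machines with 8 CPUs each, switched on for a full day.
on = cpu_hours_on(10, 8, 24)                 # 1,920 CPU hours on
# 480 hypothetical tasks, each using 2 CPUs for 1.5 hours.
used = cpu_hours_used([(2, 1.5)] * 480)      # 1,440 CPU hours used
print(grid_efficiency(used, on))             # 0.75
print(low_utilisation_hours([80, 30, 10, 64], cpus_on=80))  # [1, 2]
```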