Thoughts on AI for 2025

I am in the fun position of not only using AI as an engineer but also needing to make hiring decisions in the face of tools such as Cursor and Devin. I thought I'd share some thoughts.

We’re driving off for a skiing trip soon (actually by the time I publish this we’re probably already back from it). As my daughters get older, they want to be more involved in the planning process of our holidays too. As such, my eldest, excited about the fact we may do the trip in a camping van this time, wanted to plan what she could do on the 12-hour drive to the Alps.

I obliged by handing her an iPad and suggesting some searches to start with in Google. She came back a few minutes later complaining that she couldn’t find anything. Sound familiar? I proposed we ask ChatGPT instead. This provided a much more satisfactory response. The information she was looking for was provided without clutter or spurious unrelated adverts (which even at 9 years old she is adept at ignoring).

This probably says as much about the decline of a once powerful search engine as it does about AI. After all, if an LLM trained on the internet provided the answer, the raw data was out there somewhere.

Generative AI is very much with us and there is no putting the genie back in the bottle, so I have some thoughts to share. This isn’t a topic I’m any kind of expert on, but I am in the fun position not only of using it as an engineer but also, as the CEO of HMx Labs, making hiring decisions in the face of tools such as Cursor and Devin.

Developer Supply Chain

Riddle me this, Batman: if no one will hire junior developers any more, where will our senior developers come from in 5 years’ time?

It’s January 2025 and tech jobs are still in the doldrums. Junior developers are told by everyone not to use AI or they won’t learn. Senior developers are benefitting from a significant productivity boost by using AI.

Early in 2024 I wrote that whilst AI might not take your job it will certainly affect the job market for software developers, and junior developers in particular. I think it’s also still true that if you care about learning something new, you shouldn’t use AI to just do it for you. You won’t learn anything.

The problem is, it is also true that for a senior developer, using tools such as GitHub Copilot, Cursor or Windsurf results in increased productivity. There are many (flawed) studies (adverts?) out there claiming various levels of productivity increases by using AI. I won’t try and put a number on it, but between those studies, anecdata, and personal experience I’m pretty sure there is a decent performance improvement.

If I have a team of 5 software engineers working without AI and I need more engineering capacity, do I hire a junior dev? Or do I just pay $10 a month for a Copilot subscription for each of them? Or $500 a month for Devin? As someone running a business as well as writing code, that’s a very real choice.

There’s a serious problem here though. What happens when my senior engineers retire or decide to go and live off grid and farm goats instead? Where am I going to find new senior devs if no one hired and trained any?

So we do. As well as using AI we continue to hire less experienced engineers and invest in training them. That leaves us open to another problem though. Retention. If your freshly skilled senior developers keep leaving you may be performing an altruistic function in the software engineering ecosystem but you still have the same problem.

Who Trains Future AI?

It’s no secret that OpenAI used the contents of Stack Overflow to train ChatGPT. Whether or not traffic to Stack Overflow crashed subsequently seems to be a little contested (Stack Overflow claim it hasn’t, other sources claim rather significant drops).

One thing’s for sure though: if it’s quicker and easier to get the answer you need right in your IDE (such as Cursor) than to search on Stack Overflow and wade through the toxic sludge to find an answer that is still up to date and works, then you’re likely to stay in your IDE.

Now, I’ll admit I still use SO. Mostly because my default position is never to trust the answer produced by an LLM (so it’s not quicker if I have to check anyway) but I suspect I am in a small minority. What happens when most people don’t use SO? Where will the training data for new technologies come from?

Maybe there won’t be any? Satya Nadella is of the opinion that SaaS applications are just a wrapper around a database that will be replaced by AI generated code. Perhaps in a future world we no longer have any new libraries or frameworks. Or languages. Maybe we just build more and more AI generated code.

We are seeing increased adoption of languages and frameworks not necessarily based on their capabilities but on how well AI tools are able to generate code for them (which in turn will be a function of the quantity of existing training data in the public domain).

Will this be how innovation dies?

Code is a Liability

Any accountant or investor will tell you software is an asset. Any developer will tell you that code is a liability. Maintaining it is expensive. We try and write as little of it as possible. On purpose.

AI, on the other hand, does no such thing. Not only is it able to crank out lines of code faster even than the mythical 100x developer, it is also very happy to do so with little thought given to code reuse, common libraries or frameworks. Especially if those libraries and frameworks happen to be private.

While there have been improvements in this area over the last year, the sheer concept of generating entire applications using LLMs or other generative AI tools flies in the face of code reuse. This is especially true of enterprise applications, which will often be composed of numerous internal (private) libraries. Perhaps we will see advancements in identification of duplicated code by LLMs and extraction of that into shared libraries that then become part of its training set to be used in generating future code? We can only hope.

Measuring Software

An early study in 2023 by GitClear showed an increase in code churn since the advent of GitHub Copilot.

In the study this is presented as a bad outcome. But we’re judging that outcome based on the standards and expectations we have of code quality when code is written by humans. I’m not sure that will necessarily be true for AI written code.

Let me give you an example. Imagine you have an API or utility library. Let’s pretend that the engineering team behind it has a test suite that fully tests the public API including all possible edge cases and performance criteria. (How plausible this seems to you will really depend on your background and employment history!) In such a scenario, if you requested a change to the implementation and the AI agent completely rewrote large sections of it, would you care?

If humans no longer look at the implementation but simply have effective means of judging the output, does it matter if the implementation changes completely every time an AI agent works on it? In fact, do we even version control it in the same way? Do we treat future AI generated code the way we treat old school code generation tools where we do not edit that code by hand?
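To make the thought experiment a little more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `dedupe_v1` and `dedupe_v2` stand in for "the implementation before and after an AI agent rewrote it", and `check_contract` stands in for the test suite that only looks at the public behaviour. A real suite would need far more cases (and performance criteria) than these four.

```python
from typing import Callable, List

# Hypothetical "before" implementation: an explicit loop.
def dedupe_v1(items: List[int]) -> List[int]:
    """Remove duplicates, keeping the first occurrence of each item."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result

# Hypothetical "after" implementation: a complete rewrite.
def dedupe_v2(items: List[int]) -> List[int]:
    """Same contract, entirely different code (dicts preserve insertion order)."""
    return list(dict.fromkeys(items))

def check_contract(dedupe: Callable[[List[int]], List[int]]) -> bool:
    """Black-box check: judges only inputs and outputs, never the code."""
    cases = [
        ([], []),
        ([1], [1]),
        ([1, 1, 1], [1]),
        ([3, 1, 3, 2, 1], [3, 1, 2]),
    ]
    return all(dedupe(list(inp)) == expected for inp, expected in cases)

if __name__ == "__main__":
    for impl in (dedupe_v1, dedupe_v2):
        print(impl.__name__, "passes" if check_contract(impl) else "fails")
```

Both versions satisfy the same checks, so from the outside there is nothing to tell them apart. Whether that is enough depends entirely on how much you trust the checks.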

I think we are rather unprepared for a world in which more code might be written by AI than by humans. Many of our software engineering practices won’t really hold any longer and we have not even started to adapt these.

The most important of these, and the one we have struggled with greatly so far, is judging the quality and acceptability of software. Perhaps AI will finally force us to solve this? Probably not. Will we focus instead on defining more clearly the parameters of the problem, something we tend to struggle with today as an industry (see the rise and fall of agile)? Certainly, any kind of AI agent will benefit from clearly defined requirements. Perhaps if we are also able to judge its output by those same requirements we no longer care about the implementation?

I believe if we are to migrate from AI working simply as copilots and assistants to being fully fledged engineer replacements (as Devin claims to be) then we also need to change the paradigm under which they operate. This is especially true if we expect such AI software engineers to be used by non-engineers.

After all, when you hire a motor mechanic to repair your car, you really only look at the outcome. (Unless you’re like me and can do the work yourself and check).

I don’t know how much further LLM based code generation is able to develop. Perhaps we’ve peaked, perhaps we’re too power limited to progress. Or perhaps we’ve barely scratched the surface. One thing is for sure though, the way we manage code and assess it will have to change. Quickly.

March to Homogeneity & Mediocrity?

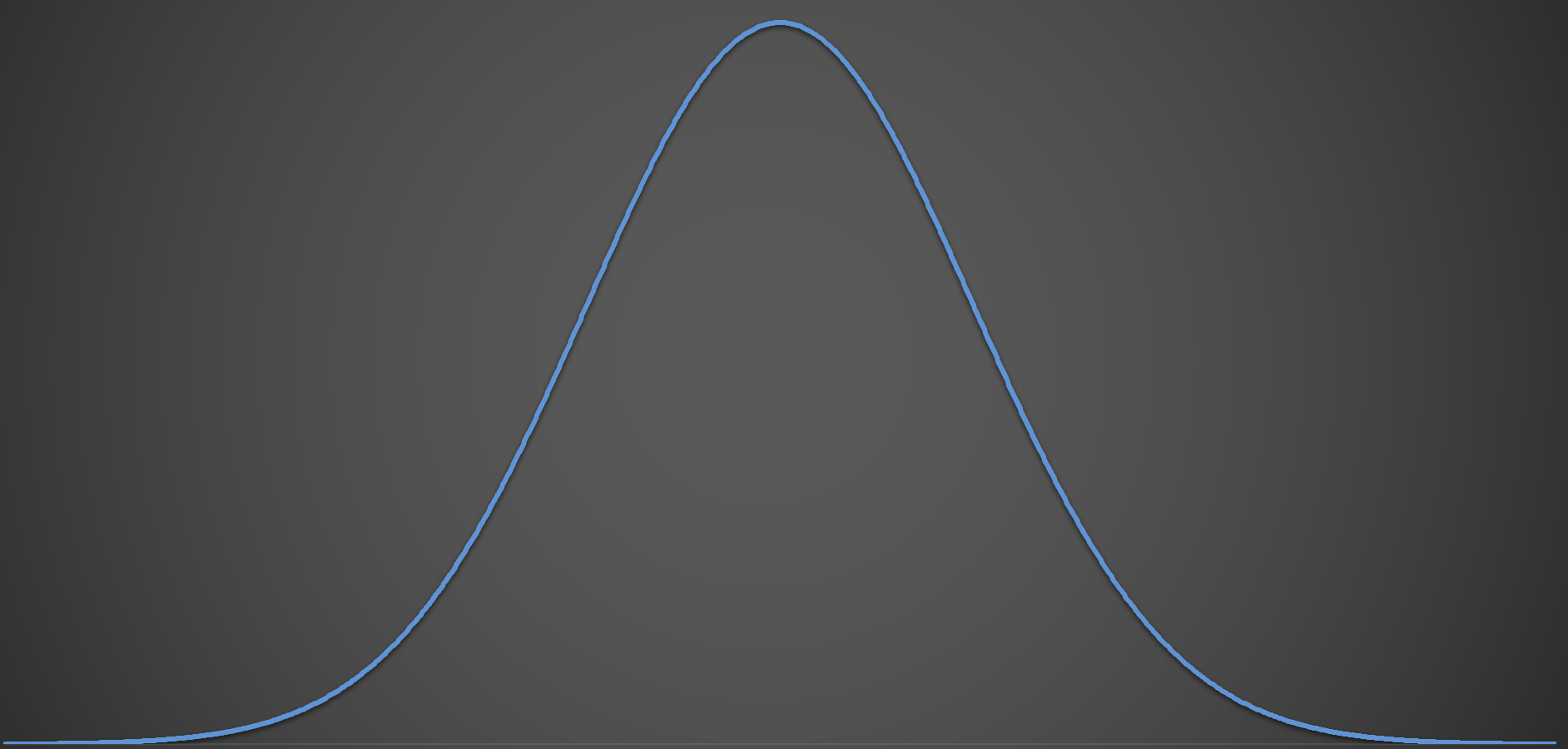

If the distribution of human ability in a particular skill, say writing code or drawing pictures, looks like this

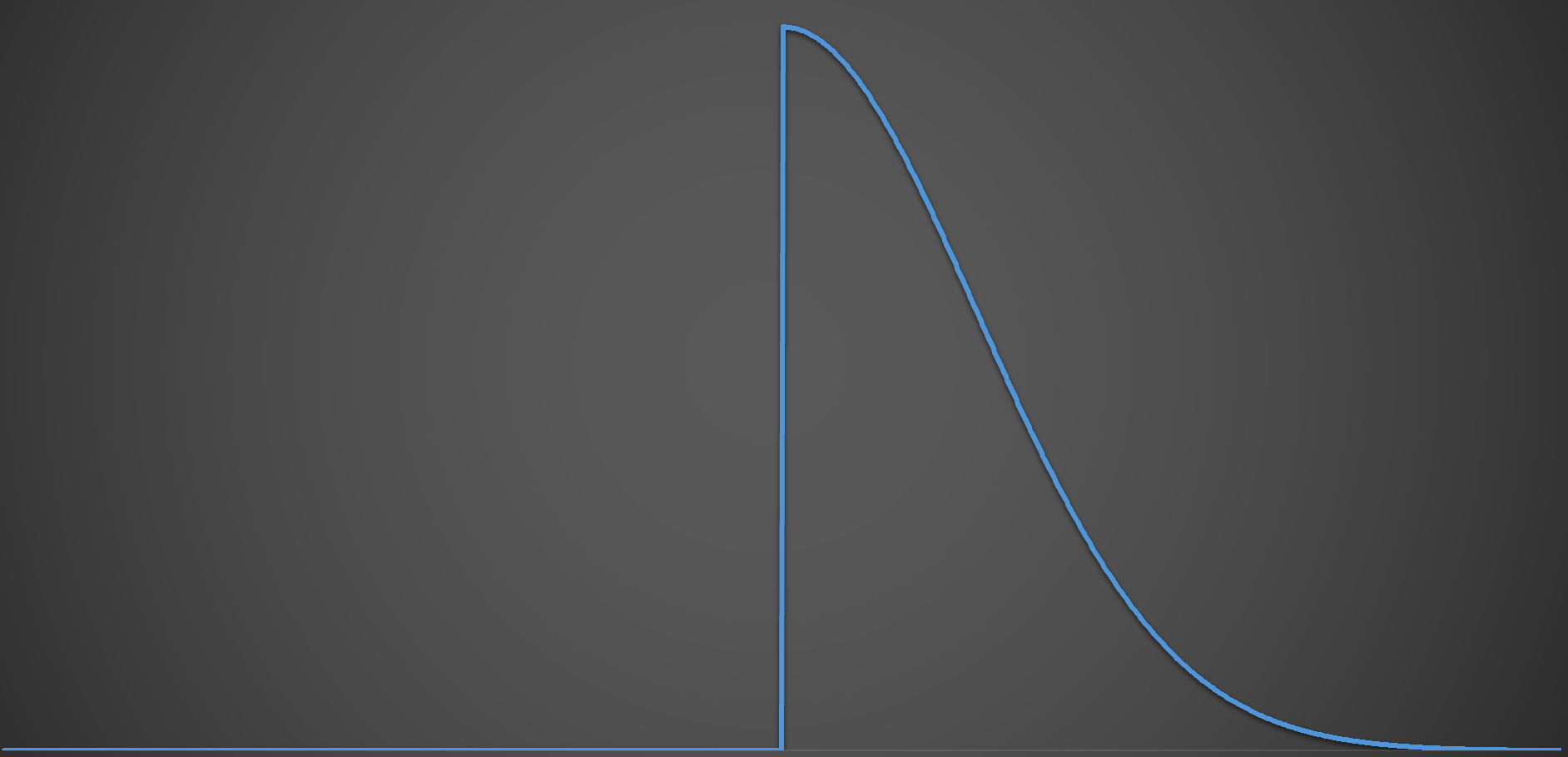

What happens when GenAI becomes sufficiently ubiquitous that anyone is able to use it (are we there already)? Assuming (and to my mind this remains an assumption, for now at least) that the GenAI is at least as good as an “average” human, does this mean we lose the first half of the bell curve? Does a gen-AI assisted human skill distribution look like this:

This may initially seem like a great result. With the mere boiling away of the oceans we have enabled half the population to become better. I think though, the impact doesn’t stop there. If becoming “good” at a skill previously would have taken hours of learning and practice and gen-AI enables that with little to no effort, where is the incentive to put in those hours?
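For anyone who prefers numbers to pictures, the "lose the first half of the bell curve" idea can be sketched as a toy simulation. This is purely illustrative and rests on assumptions that are mine rather than measured: skill is normally distributed (the mean of 100 and standard deviation of 15 are arbitrary), gen-AI performs exactly at the population mean, and everyone below that level simply uses it.

```python
import random
import statistics

def simulate(n: int = 10_000, mean: float = 100.0,
             stdev: float = 15.0, seed: int = 0):
    """Return (unaided, assisted) skill samples for a toy population."""
    rng = random.Random(seed)
    # Assumption: unaided human skill follows a bell curve.
    unaided = [rng.gauss(mean, stdev) for _ in range(n)]
    # Assumption: gen-AI is exactly "average", so with it nobody
    # performs below the mean; those above the mean are unchanged.
    assisted = [max(skill, mean) for skill in unaided]
    return unaided, assisted

def describe(label: str, skills: list) -> None:
    """Print a few summary statistics for one population."""
    print(f"{label}: min={min(skills):.1f} "
          f"mean={statistics.mean(skills):.1f} "
          f"max={max(skills):.1f}")

if __name__ == "__main__":
    unaided, assisted = simulate()
    describe("unaided ", unaided)
    describe("assisted", assisted)
```

In this toy model the floor rises to the average and the top is untouched. It says nothing about the second-order effect discussed here, where fewer people bother to put in the hours needed to get above that floor.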

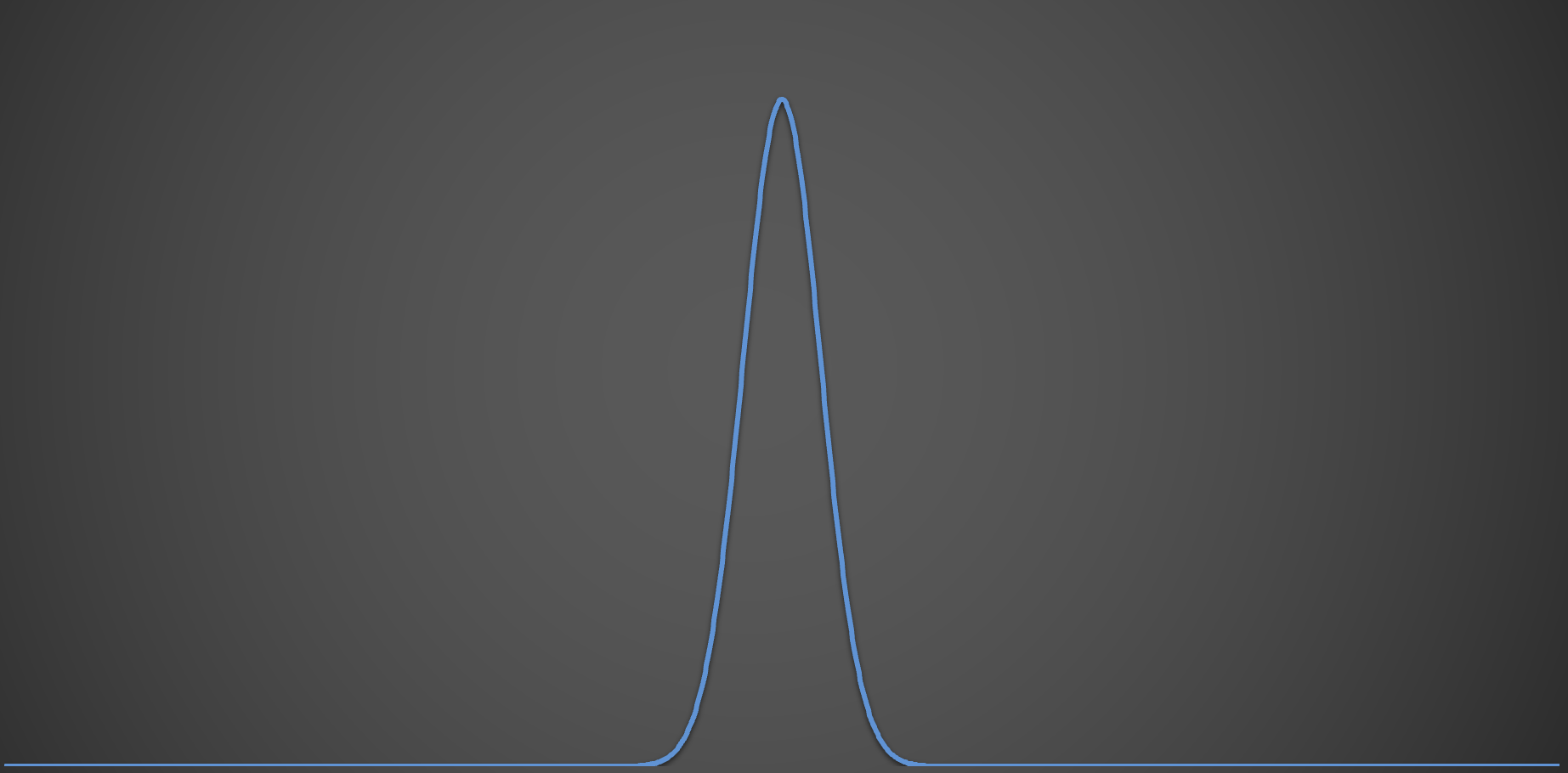

This will result in fewer people making that effort? And with that what we will see is fewer people who are better than gen-AI? Will the distribution in skill in fact start to look like this:

Given that recent research shows that LLM output remains derivative, this has significant potential impact on our ability to continue to innovate.

Coupled with a lack of incoming younger talent, are we simply paving the way for our own mediocrity?

What now?

If you’re reading this hoping for guidance, I’m afraid I don’t have any concrete answer for you. As I’ve said in the past, my crystal ball is not better than yours. In fact, I’m pretty sure it’s worse.

We have however seen this big tech playbook before. VC funded startups get us all hooked using the VC’s cash to foot the bills. Like a ketamine dealer giving you the first hit for free. What are the odds that it costs Microsoft more than $10 per month per user to operate GitHub Copilot? OpenAI CEO Sam Altman has already stated that even their most expensive tier at $200/month is losing money. At some point both big tech and VCs will want to see a return on their billions in investment. As we know all too well from our now subscription defined lives, when this happens the cost of your AI service will increase.

We aren’t about to put the genie back in the bottle so doing your ostrich impression is probably not the best idea. At the same time though the real costs (both monetary and societal) of using gen-AI will not be apparent for some time. I’m not sure I’d bet the house on it just yet either. If SaaS companies that have adopted AI already aren’t happy with their reduced profitability, just wait till the VCs and big tech companies start trying to cash in on their investments.

If you are a business using AI as a service, be sure that you are able to either absorb or pass on the inevitable price hikes. Or are willing to go back to operating without it.