HPC on Cloud for Financial Risk — A Comparative Analysis

This article presents the results of running the COREx benchmark on over 650 different VM types across the three major cloud providers. It aims to put these results in context, help HPC practitioners interpret them when optimising their systems, and discuss the methodology employed.

Before delving into the results, it is important to bear in mind what they do and, perhaps more importantly, do not mean.

The relative performance of a cloud VM in crunching financial analytics is but one data point in the selection of your cloud infrastructure: a very important one, but still only one amongst many. The COREx benchmark has been used for all data presented below.

Most critically, no consideration has been given to the number of VMs of each type that are actually available (in fact, such data is close to impossible to find). The fastest VM type may be ten times faster than the next best, but if it is in short supply, that is of little benefit to any HPC cluster worthy of the title.

Secondly, no consideration is given to the cost of each VM type. This will be the topic of a future article on the performance-adjusted pricing of each VM type.

The definitions of CPU and other HPC terms follow our glossary.

VM selection

Including every VM type offered by all three vendors was unfortunately out of reach (financially) for the purposes of this article. We have elected to include:

- All compute-specific VM families

- All HPC-specific VM families

- Most general-purpose VM families

- Any VM families the cloud vendor felt should be included

- Any VM families our clients are using or have expressed an interest in

This should be sufficiently comprehensive to cover all financial risk HPC use cases.

Test methodology

In all cases, the tests were run in a cloud vendor account created and paid for by HMx Labs. These are standard business accounts that any individual or small business can obtain by signing up via the vendor's web console.

Quota increases were requested to allow sufficient vCPUs to run the largest VM types. For Microsoft Azure, it was also necessary to obtain a paid support contract in order to disable SMT (GCP and AWS allow SMT to be disabled without a paid support subscription). Google was the only vendor that required engaging with its sales team to obtain sufficient quota to run all the required VMs. Beyond this, no additional privileges were granted to any of the cloud vendor accounts.

The tests were run in a European region, chosen either as suggested by the cloud provider, where HMx Labs was able to obtain sufficient quota, or where the VM type was available. For the purposes of this article, all VM types from each cloud vendor were run in a single region, with the exception of AWS HPC instances and GCP ARM-based models, which weren’t available in the region initially selected. The regions used were as follows:

- AWS: eu-west-1 (except HPC instances, eu-north-1)

- Azure: westeurope

- GCP: europe-west1 (except ARM instances, europe-west4)

For every VM, the test starts in an empty cloud environment, and all the resources the VM needs (network, resource groups, etc.) are provisioned. Once the VM is running and SSH connectivity is established, COREx is launched. When it completes and the results have been retrieved, all provisioned resources (including any network, security policies, resource groups, etc.) are deleted to return the account to an empty state. This process was repeated a minimum of three times for each VM instance type.
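The per-VM lifecycle described above (provision from an empty account, run COREx over SSH, retrieve the result, tear everything down, repeat at least three times) can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the HMx Labs harness: `CloudProvider`, `provision`, `run_benchmark` and `teardown` are hypothetical names standing in for the vendor SDK and SSH calls, and `FakeProvider` exists only so the loop can be exercised without a cloud account.

```python
from dataclasses import dataclass
from typing import Protocol


class CloudProvider(Protocol):
    """Hypothetical interface; real vendor SDK calls look quite different."""

    def provision(self, vm_type: str) -> str: ...     # network, resource group, VM; returns a host
    def run_benchmark(self, host: str) -> float: ...  # SSH in, run COREx, return the score
    def teardown(self) -> None: ...                   # delete everything that was provisioned


def benchmark_vm_type(provider: CloudProvider, vm_type: str, repeats: int = 3) -> list[float]:
    """Run the provision/run/teardown cycle `repeats` times, starting empty each time."""
    if repeats < 3:
        raise ValueError("the methodology calls for at least three runs per VM type")
    scores = []
    for _ in range(repeats):
        try:
            host = provider.provision(vm_type)
            scores.append(provider.run_benchmark(host))
        finally:
            # Always return the account to an empty state, even if a run fails.
            provider.teardown()
    return scores


@dataclass
class FakeProvider:
    """Hypothetical stand-in that returns canned scores instead of calling a cloud."""
    canned: list[float]
    live_resources: int = 0
    calls: int = 0

    def provision(self, vm_type: str) -> str:
        self.live_resources += 1
        return f"{vm_type}-host"

    def run_benchmark(self, host: str) -> float:
        score = self.canned[self.calls]
        self.calls += 1
        return score

    def teardown(self) -> None:
        self.live_resources = 0


provider = FakeProvider(canned=[10.0, 11.0, 12.0])
print(benchmark_vm_type(provider, "example-vm"))  # [10.0, 11.0, 12.0]
```

Putting `teardown` in a `finally` block is the design choice that matters here: a failed or interrupted run still returns the account to an empty state, so the next run starts clean and no billable resources are left behind.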

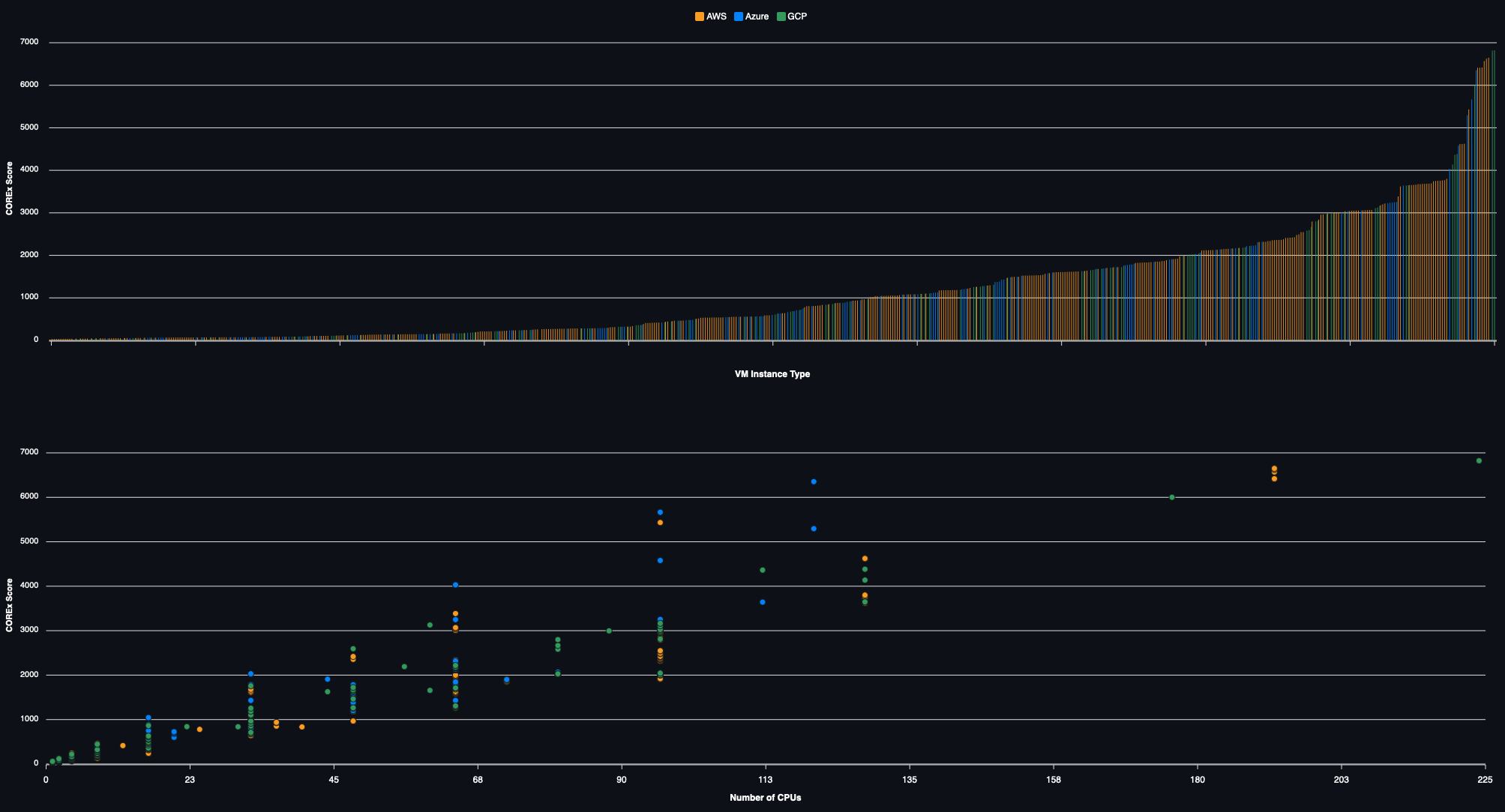

VM results by cloud

Somewhat as expected, the results at VM level tell, more than anything else, a story of COREx score increasing with vCPU count, alongside a large variation in available performance.

The social media Top Trumps winner is the n2d-highcpu-224 (GCP), which, given that it is also the VM type with the highest CPU count, is not too surprising. The margin between it and the next fastest VM type is, however, smaller than CPU counts alone would suggest. It is also worth noting that we were not able to get our hands on any of Azure’s new HX or HB v4 families of VM SKUs (currently in preview). Based on the performance of the current HB family, we suspect these may change the results somewhat. GCP’s new C3 series (also in preview) has been included.

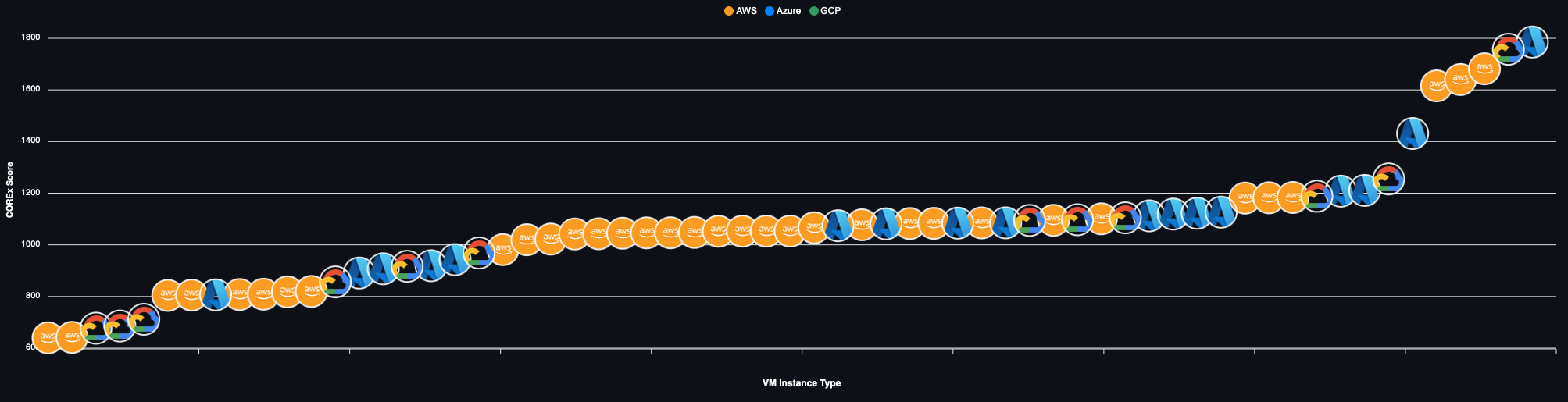

CPU results by cloud

Naturally, the next step is to analyse the results per CPU. Looking at the mean COREx score per CPU yields the following picture.

There is clearly a large variation (a factor of three) between the slowest and fastest VM CPUs, which exceeds the difference in performance one would expect across the three generations of hardware that may be in use.

Delving further, if we limit our data set to 32-CPU machines, we see the same variation in performance repeated. Note the change in scale on the Y axis.
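The per-CPU view amounts to a simple normalisation: average the COREx scores from the repeated runs of a VM type, divide by its CPU count, then compare the fastest and slowest results, optionally restricted to a single CPU count as in the 32-CPU cut. The sketch below assumes that definition of "mean COREx score per CPU" (the article does not spell out the exact aggregation), and the VM names and scores are invented purely to show the arithmetic.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class VmResult:
    """One VM type's COREx results (hypothetical structure for illustration)."""
    vm_type: str
    cpus: int                   # CPU count, as defined in the glossary
    scores: tuple[float, ...]   # COREx score from each repeated run

    def mean_score_per_cpu(self) -> float:
        # Average across repeated runs, then normalise by CPU count.
        return mean(self.scores) / self.cpus


def per_cpu_spread(results: list[VmResult], cpus: Optional[int] = None) -> float:
    """Ratio of the fastest to the slowest mean per-CPU score.

    If `cpus` is given, only VM types with exactly that CPU count are compared.
    """
    subset = [r for r in results if cpus is None or r.cpus == cpus]
    if not subset:
        raise ValueError("no VM types match the requested CPU count")
    per_cpu = [r.mean_score_per_cpu() for r in subset]
    return max(per_cpu) / min(per_cpu)


# Invented numbers, purely to show the calculation.
results = [
    VmResult("vm-a", 32, (320.0, 320.0, 320.0)),     # 10 per CPU
    VmResult("vm-b", 32, (960.0, 960.0, 960.0)),     # 30 per CPU
    VmResult("vm-c", 64, (3200.0, 3200.0, 3200.0)),  # 50 per CPU
]
print(per_cpu_spread(results))           # 5.0 across all VM types
print(per_cpu_spread(results, cpus=32))  # 3.0 among 32-CPU VM types only
```

Because the CPU count is fixed for a given VM type, averaging the runs first and then dividing gives the same answer as averaging per-run per-CPU scores.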

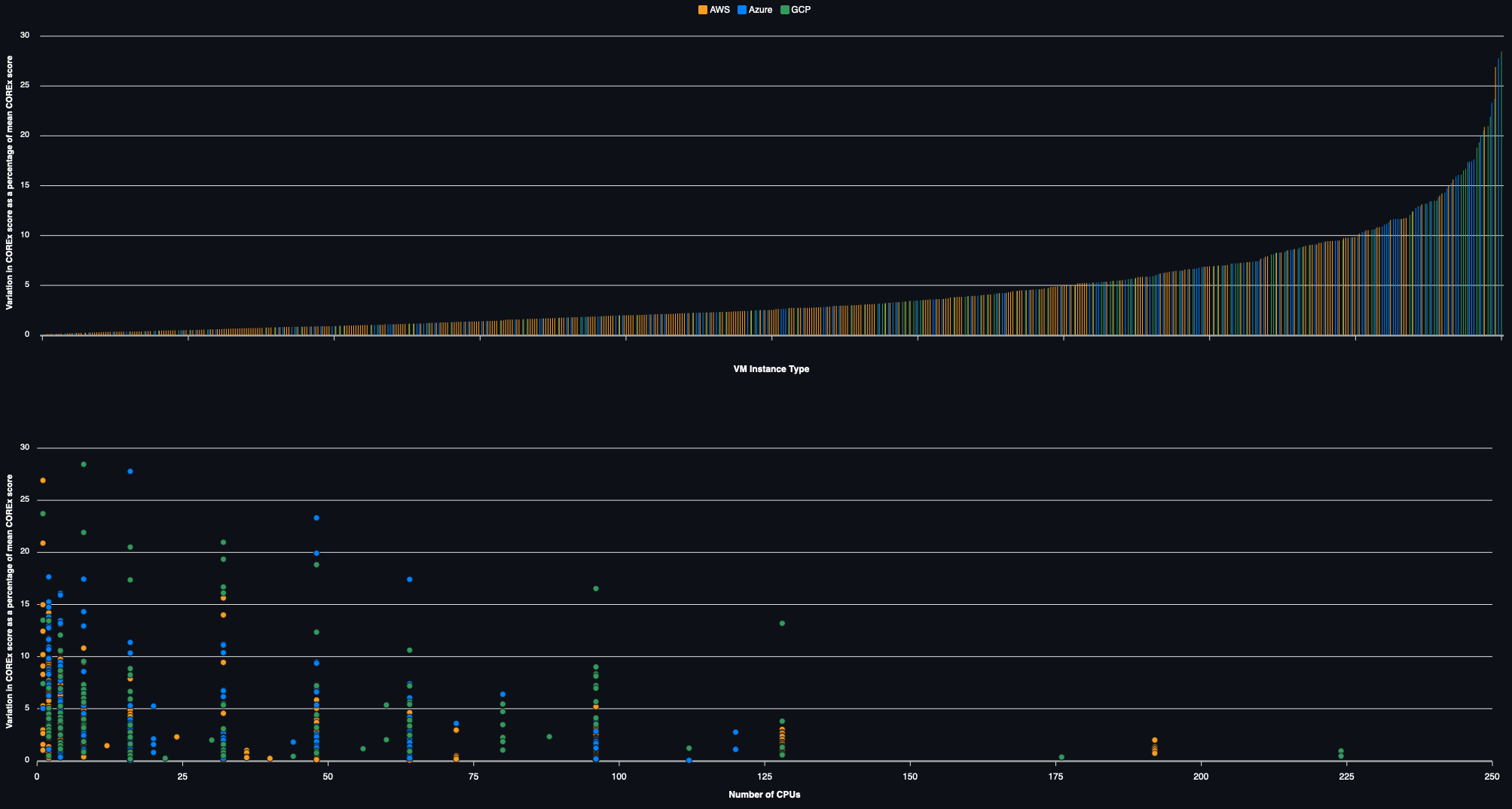

Consistency

“In the realm of high-performance computing in the cloud, two virtual machines emerged: ‘Blazing Bolt’ with unmatched speed and ‘Steadfast Sentinel’ with unwavering consistency. Researchers were captivated by ‘Blazing Bolt’s’ impressive speed, but soon realized its sporadic performance made it unreliable. Meanwhile, ‘Steadfast Sentinel’ may not have been the fastest, yet its consistent performance became the foundation of their work, enabling reliable progress. Ultimately, they discovered that in the realm of HPC in the cloud, true power lies not just in speed but in the steadfast consistency that ensures uninterrupted achievement.” (ChatGPT, when asked about performance vs consistency in HPC)

As even ChatGPT knows, consistency is key, so it would be remiss of us not to share some results on it.

Whilst most virtual machines exhibit fairly uniform performance from run to run, the chart shows a significant tail with variations that would be of concern. The colours indicate that AWS is generally more homogeneous than the other two providers, and there is also a clear (and expected) trend of consistency increasing with CPU count.
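The article does not state which measure of consistency underlies the chart. One common choice, assumed here, is the coefficient of variation: the sample standard deviation of the repeated runs divided by their mean, which lets VM types of very different sizes be compared on the same scale. The sketch computes it per VM type and flags those above a threshold; the VM names, scores and 5% threshold are all hypothetical.

```python
from statistics import mean, stdev


def coefficient_of_variation(scores: list[float]) -> float:
    """Sample standard deviation of repeated COREx runs divided by their mean."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to measure consistency")
    return stdev(scores) / mean(scores)


def flag_inconsistent(runs: dict[str, list[float]], threshold: float = 0.05) -> list[str]:
    """Return VM types whose run-to-run variation exceeds `threshold`.

    The 5% default is an arbitrary, hypothetical cut-off, not one used in the article.
    """
    return sorted(vm for vm, scores in runs.items()
                  if coefficient_of_variation(scores) > threshold)


# Invented scores for two hypothetical VM types.
runs = {
    "steady-vm": [100.0, 100.0, 100.0],
    "bursty-vm": [80.0, 100.0, 120.0],
}
print(coefficient_of_variation(runs["bursty-vm"]))  # 0.2
print(flag_inconsistent(runs))                      # ['bursty-vm']
```

With only three runs per VM type, the estimate of spread is itself noisy, so a flagged VM type is a candidate for more runs rather than a verdict.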

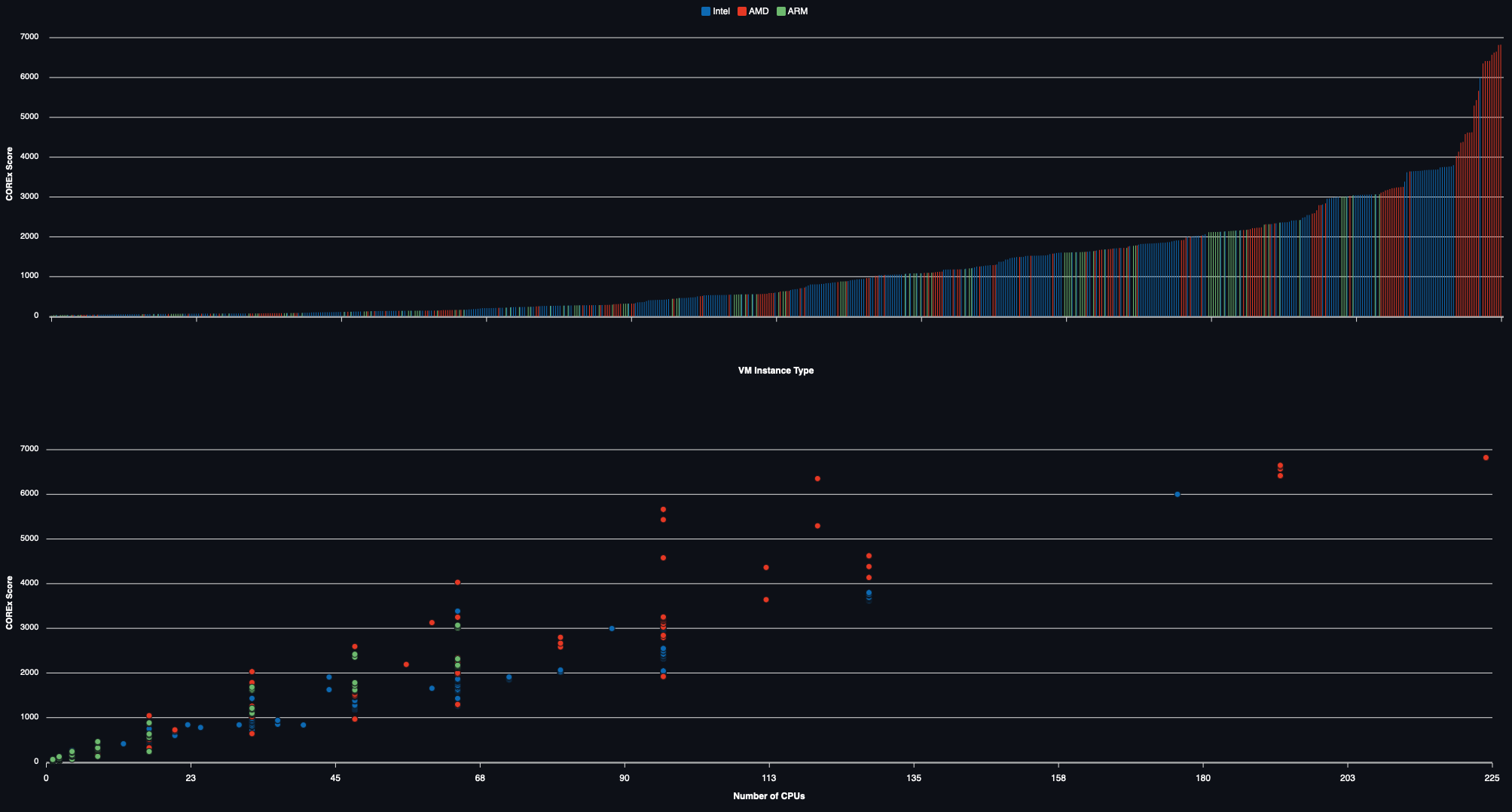

Results by CPU manufacturer

Another largely expected result is AMD’s dominance at the high end of the machine scale, again largely due to the higher CPU counts per VM. However, Intel’s latest generation of Sapphire Rapids CPUs has closed the gap significantly in this space. Where a year ago adopting AMD machines would have been an obvious choice, even if it required some recompilation or development work, the decision is far less clear-cut now and will depend greatly on adoption costs and required timescales.

Grouping the COREx score per CPU by CPU manufacturer instead of by cloud vendor similarly reveals a distinct dominance of AMD and (surprisingly, though perhaps it shouldn’t be) ARM CPUs at the upper end of the scale.
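Switching the view from cloud vendor to CPU manufacturer is just a change of grouping key. A minimal sketch, assuming each VM type contributes one mean COREx score and is weighted equally within its group (the article does not say how its per-manufacturer figures are aggregated); the group labels and numbers are invented.

```python
from collections import defaultdict
from statistics import mean


def rank_groups_by_per_cpu_score(rows: list[tuple[str, int, float]]) -> list[tuple[str, float]]:
    """rows: (group, cpu_count, mean COREx score) for each VM type.

    Returns (group, mean per-CPU score) pairs, best first. Each VM type counts
    once, regardless of how many CPUs it has.
    """
    per_cpu: dict[str, list[float]] = defaultdict(list)
    for group, cpus, score in rows:
        per_cpu[group].append(score / cpus)
    ranked = [(group, mean(values)) for group, values in per_cpu.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Invented rows with hypothetical labels; put the cloud vendor in the first
# field instead to get the per-vendor view.
rows = [
    ("maker-A", 32, 960.0),
    ("maker-A", 64, 1920.0),
    ("maker-B", 32, 640.0),
    ("maker-C", 64, 1664.0),
]
print(rank_groups_by_per_cpu_score(rows))
# [('maker-A', 30.0), ('maker-C', 26.0), ('maker-B', 20.0)]
```

Weighting each VM type equally means a manufacturer with many VM families pulls its group average more than one with few; weighting by CPU count instead would be an equally defensible choice.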

No silver bullets

More than anything else, these results highlight that there is no simple answer to selecting the correct VM type, or even the “best” cloud provider or CPU manufacturer. Nor is there a clear correlation between CPU count and virtual machine performance.

As will surprise no one with HPC experience, the only way to optimise the system is to have effective performance testing (and metrics) in place. As a reminder, all the above results are based on the COREx benchmark, which may or may not correlate well with your own financial risk workloads.
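One way to act on this is to check whether COREx rankings agree with your own workloads: run a representative risk job on a shortlist of VM types and compare the two orderings. The sketch below uses Spearman rank correlation (our choice of statistic; the article does not prescribe one), written in plain Python so no third-party packages are needed. All inputs are invented, and the throughput metric is a hypothetical placeholder for whatever your own job measures.

```python
from statistics import mean


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of equal values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two entries")
    return pearson(average_ranks(x), average_ranks(y))


# Invented numbers for four hypothetical VM types.
corex_scores = [100.0, 200.0, 300.0, 400.0]
own_workload_throughput = [1.0, 2.5, 2.0, 4.0]  # hypothetical, e.g. trades priced per second
print(spearman(corex_scores, own_workload_throughput))  # 0.8
```

A value close to 1 suggests the COREx ordering is a reasonable proxy for your workload across those VM types; a low value suggests you need your own benchmark. If SciPy is available, `scipy.stats.spearmanr` computes the same statistic.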